| Commit message (Collapse) | Author | Age | Files | Lines |

|---|


spark.sql.tungsten.enabled will be the default value for both codegen and unsafe; the two individual flags are kept internally for debugging and testing.
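
The fallback described above can be sketched as follows. This is only an illustration: the two internal key names and the default of `True` are assumptions, since only `spark.sql.tungsten.enabled` is named in this message.

```python
# Only TUNGSTEN is named in the commit message; the other two keys are assumed names.
TUNGSTEN = "spark.sql.tungsten.enabled"
CODEGEN = "spark.sql.codegen"        # assumed internal key
UNSAFE = "spark.sql.unsafe.enabled"  # assumed internal key


def resolve_flags(conf: dict) -> dict:
    """Resolve both internal flags, falling back to the umbrella flag."""
    tungsten = conf.get(TUNGSTEN, True)  # the default of True is an assumption
    return {
        CODEGEN: conf.get(CODEGEN, tungsten),
        UNSAFE: conf.get(UNSAFE, tungsten),
    }
```

An explicit internal flag still wins over the umbrella flag, which is what makes the internal flags useful for debugging.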

cc marmbrus rxin

Author: Davies Liu <davies@databricks.com>

Closes #7998 from davies/tungsten and squashes the following commits:

c1c16da [Davies Liu] update doc

1a47be1 [Davies Liu] use tungsten.enabled for both of codegen/unsafe


https://issues.apache.org/jira/browse/SPARK-9691

jkbradley rxin

Author: Yin Huai <yhuai@databricks.com>

Closes #7999 from yhuai/pythonRand and squashes the following commits:

4187e0c [Yin Huai] Regression test.

a985ef9 [Yin Huai] Use "if seed is not None" instead "if seed" because "if seed" returns false when seed is 0.
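
The pitfall named in a985ef9 can be reproduced without Spark. The helper names below are hypothetical and only illustrate the truthiness check; they are not the PySpark functions touched by this change.

```python
import random


def make_rng_buggy(seed=None):
    # "if seed" treats 0 like "no seed", so seed=0 is silently ignored.
    return random.Random(seed) if seed else random.Random()


def make_rng_fixed(seed=None):
    # "is not None" distinguishes a missing seed from the valid seed 0.
    return random.Random(seed) if seed is not None else random.Random()
```

With the fixed check, two generators built with `seed=0` produce the same sequence.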


Remove 2 defunct SBT download URLs and replace with the 1 known download URL. Also, use https.

Follow up on https://github.com/apache/spark/pull/7792

Author: Sean Owen <sowen@cloudera.com>

Closes #7956 from srowen/SPARK-9633 and squashes the following commits:

caa40bd [Sean Owen] Remove 2 defunct SBT download URLs and replace with the 1 known download URL. Also, use https.


Spark should not mess with the permissions of directories created

by the cluster manager. Here, by setting the block manager dir

permissions to 700, the shuffle service (running as the YARN user)

wouldn't be able to serve shuffle files created by applications.

Also, the code to protect the local app dir was missing in standalone's

Worker; that has been now added. Since all processes run as the same

user in standalone, `chmod 700` should not cause problems.

Author: Marcelo Vanzin <vanzin@cloudera.com>

Closes #7966 from vanzin/SPARK-9645 and squashes the following commits:

6e07b31 [Marcelo Vanzin] Protect the app dir in standalone mode.

384ba6a [Marcelo Vanzin] [SPARK-9645] [yarn] [core] Allow shuffle service to read shuffle files.


This is a follow-up to https://github.com/apache/spark/pull/7813. It renames `HybridUnsafeAggregationIterator` to `TungstenAggregationIterator` and makes it work only with `UnsafeRow`. Also, I add a `TungstenAggregate` that uses `TungstenAggregationIterator` and make `SortBasedAggregate` (renamed from `SortBasedAggregate`) work only with `SafeRow`.

Author: Yin Huai <yhuai@databricks.com>

Closes #7954 from yhuai/agg-followUp and squashes the following commits:

4d2f4fc [Yin Huai] Add comments and free map.

0d7ddb9 [Yin Huai] Add TungstenAggregationQueryWithControlledFallbackSuite to test fall back process.

91d69c2 [Yin Huai] Rename UnsafeHybridAggregationIterator to TungstenAggregateIteraotr and make it only work with UnsafeRow.


Because `JobScheduler.stop(false)` may set `eventLoop` to null while `JobHandler` is running, `eventLoop` may already be null when `post` is called.

This PR fixes this bug and also makes the threads in `jobExecutor` daemon threads.
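
One common way to guard against this kind of race is to read the shared field once into a local variable and check that local. The sketch below assumes that approach; the class and method names are hypothetical stand-ins, not the Streaming classes themselves.

```python
import threading


class EventLoop:
    def __init__(self):
        self.events = []

    def post(self, event):
        self.events.append(event)


class Scheduler:
    def __init__(self):
        self.event_loop = EventLoop()

    def stop(self):
        # Another thread may run this at any time.
        self.event_loop = None

    def post_safely(self, event) -> bool:
        loop = self.event_loop  # read the shared field exactly once
        if loop is None:
            return False  # scheduler already stopped; drop the event
        loop.post(event)
        return True


def start_worker(scheduler: Scheduler, event) -> threading.Thread:
    # daemon=True means this thread never keeps the process alive on exit.
    worker = threading.Thread(target=scheduler.post_safely, args=(event,), daemon=True)
    worker.start()
    return worker
```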

Author: zsxwing <zsxwing@gmail.com>

Closes #7960 from zsxwing/fix-npe and squashes the following commits:

b0864c4 [zsxwing] Fix a potential NPE in Streaming JobScheduler


…tually visible

Author: cody koeninger <cody@koeninger.org>

Closes #7995 from koeninger/doc-fixes and squashes the following commits:

87af9ea [cody koeninger] [Docs][Streaming] make the existing parameter docs for OffsetRange actually visible


robust and make all BlockGenerators subscribe to rate limit updates

In some receivers, instead of using the default `BlockGenerator` in `ReceiverSupervisorImpl`, custom generators with their own listeners are used for reliability (see [`ReliableKafkaReceiver`](https://github.com/apache/spark/blob/master/external/kafka/src/main/scala/org/apache/spark/streaming/kafka/ReliableKafkaReceiver.scala#L99) and [updated `KinesisReceiver`](https://github.com/apache/spark/pull/7825/files)). These custom generators do not receive rate updates. This PR modifies the code to allow custom `BlockGenerator`s to be created through the `ReceiverSupervisorImpl` so that they can be kept track of and rate updates can be applied to them.

In the process, I did some simplification and de-flaki-fication of some rate-controller-related tests. In particular:

- Renamed `Receiver.executor` to `Receiver.supervisor` (to match `ReceiverSupervisor`)

- Made `RateControllerSuite` faster (by increasing batch interval) and less flaky

- Changed a few internal APIs to return the current rate of block generators as `Long` instead of `Option[Long]` (it was inconsistent in places).

- Updated existing `ReceiverTrackerSuite` to test that custom block generators get rate updates as well.
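
The idea of creating custom generators through the supervisor, so that every generator receives rate updates, can be sketched as below. All names are hypothetical simplifications and do not mirror the real `ReceiverSupervisorImpl` API.

```python
class BlockGenerator:
    def __init__(self, listener, rate_limit: int):
        self.listener = listener
        self.rate_limit = rate_limit

    def update_rate(self, new_rate: int) -> None:
        self.rate_limit = new_rate


class Supervisor:
    def __init__(self, default_rate: int):
        self._default_rate = default_rate
        self._generators = []  # every generator created here is tracked

    def create_block_generator(self, listener) -> BlockGenerator:
        generator = BlockGenerator(listener, self._default_rate)
        self._generators.append(generator)
        return generator

    def update_rate_limit(self, new_rate: int) -> None:
        # Default and custom generators alike receive the update.
        for generator in self._generators:
            generator.update_rate(new_rate)
```

Because the supervisor is the only factory, a receiver cannot end up with a generator that misses updates.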

Author: Tathagata Das <tathagata.das1565@gmail.com>

Closes #7913 from tdas/SPARK-9556 and squashes the following commits:

41d4461 [Tathagata Das] fix scala style

eb9fd59 [Tathagata Das] Updated kinesis receiver

d24994d [Tathagata Das] Updated BlockGeneratorSuite to use manual clock in BlockGenerator

d70608b [Tathagata Das] Updated BlockGenerator with states and proper synchronization

f6bd47e [Tathagata Das] Merge remote-tracking branch 'apache-github/master' into SPARK-9556

31da173 [Tathagata Das] Fix bug

12116df [Tathagata Das] Add BlockGeneratorSuite

74bd069 [Tathagata Das] Fix style

989bb5c [Tathagata Das] Made BlockGenerator fail is used after stop, and added better unit tests for it

3ff618c [Tathagata Das] Fix test

b40eff8 [Tathagata Das] slight refactoring

f0df0f1 [Tathagata Das] Scala style fixes

51759cb [Tathagata Das] Refactored rate controller tests and added the ability to update rate of any custom block generator


This pull request adds a destructive iterator to BytesToBytesMap. When used, the iterator frees pages as it traverses them. This is part of the effort to avoid starvation when more than one operator can exhaust memory.

This is based on #7924, but fixes a bug there (Don't use destructive iterator in UnsafeKVExternalSorter).
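
A destructive iterator can be sketched with plain Python containers. `PagedMap` below is a hypothetical stand-in for a page-backed map, not BytesToBytesMap itself. Here "freeing" a page only means dropping the map's reference to it.

```python
from collections import deque


class PagedMap:
    def __init__(self, pages):
        # Each page is a list of records.
        self.pages = deque(list(page) for page in pages)

    def destructive_iterator(self):
        while self.pages:
            # Detach the page from the map before reading it; once its records
            # are consumed, nothing references the page any more.
            page = self.pages.popleft()
            yield from page
```

After the iterator is exhausted the map holds no pages, so the map cannot be read again.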

Closes #7924.

Author: Liang-Chi Hsieh <viirya@appier.com>

Author: Reynold Xin <rxin@databricks.com>

Closes #8003 from rxin/map-destructive-iterator and squashes the following commits:

6b618c3 [Reynold Xin] Don't use destructive iterator in UnsafeKVExternalSorter.

a7bd8ec [Reynold Xin] Merge remote-tracking branch 'viirya/destructive_iter' into map-destructive-iterator

7652083 [Liang-Chi Hsieh] For comments: add destructiveIterator(), modify unit test, remove code block.

4a3e9de [Liang-Chi Hsieh] Merge remote-tracking branch 'upstream/master' into destructive_iter

581e9e3 [Liang-Chi Hsieh] Merge remote-tracking branch 'upstream/master' into destructive_iter

f0ff783 [Liang-Chi Hsieh] No need to free last page.

9e9d2a3 [Liang-Chi Hsieh] Add a destructive iterator for BytesToBytesMap.


The golden answer file names for the existing Hive comparison tests were generated using a MD5 hash of the query text which uses Unix-style line separator characters `\n` (LF).

This PR ensures that all occurrences of the Windows-style line separator `\r\n` (CRLF) are replaced with `\n` (LF) before generating the MD5 hash, so that golden answer file names generated on Windows use an identical MD5 hash.
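
The normalization step can be sketched as follows. The function name is hypothetical and UTF-8 encoding is an assumption; the test suite's own helper may differ.

```python
import hashlib


def golden_file_hash(query: str) -> str:
    # Normalize Windows CRLF to LF so the hash is platform independent.
    normalized = query.replace("\r\n", "\n")
    return hashlib.md5(normalized.encode("utf-8")).hexdigest()
```

With this in place, the same query text hashes identically whether it was checked out with LF or CRLF line endings.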

Author: Christian Kadner <ckadner@us.ibm.com>

Closes #7563 from ckadner/SPARK-9211_working and squashes the following commits:

d541db0 [Christian Kadner] [SPARK-9211][SQL] normalize line separators before MD5 hash


IsotonicRegression

This PR contains the following changes:

* add `featureIndex` to handle vector features (in order to chain isotonic regression easily with output from logistic regression)

* make getter/setter names consistent with params

* remove inheritance from Regressor because it is tricky to handle both `DoubleType` and `VectorType`

* simplify test data generation

jkbradley zapletal-martin

Author: Xiangrui Meng <meng@databricks.com>

Closes #7952 from mengxr/SPARK-9493 and squashes the following commits:

8818ac3 [Xiangrui Meng] address comments

05e2216 [Xiangrui Meng] address comments

8d08090 [Xiangrui Meng] add featureIndex to handle vector features make getter/setter names consistent with params remove inheritance from Regressor


This re-applies #7955, which was reverted because a race condition broke the build.

Author: Wenchen Fan <cloud0fan@outlook.com>

Author: Reynold Xin <rxin@databricks.com>

Closes #8002 from rxin/InternalRow-toSeq and squashes the following commits:

332416a [Reynold Xin] Merge pull request #7955 from cloud-fan/toSeq

21665e2 [Wenchen Fan] fix hive again...

4addf29 [Wenchen Fan] fix hive

bc16c59 [Wenchen Fan] minor fix

33d802c [Wenchen Fan] pass data type info to InternalRow.toSeq

3dd033e [Wenchen Fan] move the default special getters implementation from InternalRow to BaseGenericInternalRow


Author: Dean Wampler <dean@concurrentthought.com>

Author: Nilanjan Raychaudhuri <nraychaudhuri@gmail.com>

Author: François Garillot <francois@garillot.net>

Closes #7796 from dragos/topic/streaming-bp/kafka-direct and squashes the following commits:

50d1f21 [Nilanjan Raychaudhuri] Taking care of the remaining nits

648c8b1 [Dean Wampler] Refactored rate controller test to be more predictable and run faster.

e43f678 [Nilanjan Raychaudhuri] fixing doc and nits

ce19d2a [Dean Wampler] Removing an unreliable assertion.

9615320 [Dean Wampler] Give me a break...

6372478 [Dean Wampler] Found a few ways to make this test more robust...

9e69e37 [Dean Wampler] Attempt to fix flakey test that fails in CI, but not locally :(

d3db1ea [Dean Wampler] Fixing stylecheck errors.

d04a288 [Nilanjan Raychaudhuri] adding test to make sure rate controller is used to calculate maxMessagesPerPartition

b6ecb67 [Nilanjan Raychaudhuri] Fixed styling issue

3110267 [Nilanjan Raychaudhuri] [SPARK-8978][Streaming] Implements the DirectKafkaRateController

393c580 [François Garillot] [SPARK-8978][Streaming] Implements the DirectKafkaRateController

51e78c6 [Nilanjan Raychaudhuri] Rename and fix build failure

2795509 [Nilanjan Raychaudhuri] Added missing RateController

19200f5 [Dean Wampler] Removed usage of infix notation. Changed a private variable name to be more consistent with usage.

aa4a70b [François Garillot] [SPARK-8978][Streaming] Implements the DirectKafkaController


Document spark.shuffle.service.{enabled,port}

CC sryza tgravescs

This is pretty minimal; is there more to say here about the service?

Author: Sean Owen <sowen@cloudera.com>

Closes #7991 from srowen/SPARK-9641 and squashes the following commits:

3bb946e [Sean Owen] Add link to docs for setup and config of external shuffle service

2302e01 [Sean Owen] Document spark.shuffle.service.{enabled,port}


seems https://github.com/apache/spark/pull/7955 breaks the build.

Author: Yin Huai <yhuai@databricks.com>

Closes #8001 from yhuai/SPARK-9632-fixBuild and squashes the following commits:

6c257dd [Yin Huai] Fix build.


type info"

This reverts commit 6e009cb9c4d7a395991e10dab427f37019283758.


Author: Wenchen Fan <cloud0fan@outlook.com>

Closes #7955 from cloud-fan/toSeq and squashes the following commits:

21665e2 [Wenchen Fan] fix hive again...

4addf29 [Wenchen Fan] fix hive

bc16c59 [Wenchen Fan] minor fix

33d802c [Wenchen Fan] pass data type info to InternalRow.toSeq

3dd033e [Wenchen Fan] move the default special getters implementation from InternalRow to BaseGenericInternalRow


Inspiration drawn from this blog post: https://lab.getbase.com/pandarize-spark-dataframes/

Author: Reynold Xin <rxin@databricks.com>

Closes #7977 from rxin/isin and squashes the following commits:

9b1d3d6 [Reynold Xin] Added return.

2197d37 [Reynold Xin] Fixed test case.

7c1b6cf [Reynold Xin] Import warnings.

4f4a35d [Reynold Xin] [SPARK-9659][SQL] Rename inSet to isin to match Pandas function.


merging and with Tungsten

In short:

1- FrequentItems should not use the InternalRow representation, because the keys in the map get messed up. For example, when the elements are strings, every key in the Map corresponds to the very last element observed in the partition.

2- Merging two partitions had a bug:

**Existing behavior with size 3**

Partition A -> Map(1 -> 3, 2 -> 3, 3 -> 4)

Partition B -> Map(4 -> 25)

Result -> Map()

**Correct Behavior:**

Partition A -> Map(1 -> 3, 2 -> 3, 3 -> 4)

Partition B -> Map(4 -> 25)

Result -> Map(3 -> 1, 4 -> 22)
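
The corrected result above is what a Misra-Gries-style counter with capacity 3 produces. The sketch below is an assumption about the merge logic, not a copy of the Spark implementation, and it assumes `size >= 1`. It does reproduce the "Correct Behavior" numbers.

```python
class FreqItemCounter:
    def __init__(self, size: int):
        self.size = size  # maximum number of tracked keys, assumed >= 1
        self.counts = {}

    def add(self, key, count: int):
        if key in self.counts:
            self.counts[key] += count
        elif len(self.counts) < self.size:
            self.counts[key] = count
        else:
            min_count = min(self.counts.values())
            if count >= min_count:
                # The new key survives; keys at the minimum are evicted and
                # every remaining count is reduced by the minimum.
                self.counts[key] = count
                self.counts = {
                    k: v - min_count for k, v in self.counts.items() if v > min_count
                }
            else:
                # The new key is too rare; it only erodes the existing counts.
                self.counts = {k: v - count for k, v in self.counts.items()}
        return self

    def merge(self, other: "FreqItemCounter"):
        for key, count in other.counts.items():
            self.add(key, count)
        return self
```

Merging partition B into partition A adds key 4 with count 25, evicts keys 1 and 2 (count 3, the minimum), and subtracts 3 from the rest.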

cc mengxr rxin JoshRosen

Author: Burak Yavuz <brkyvz@gmail.com>

Closes #7945 from brkyvz/freq-fix and squashes the following commits:

07fa001 [Burak Yavuz] address 2

1dc61a8 [Burak Yavuz] address 1

506753e [Burak Yavuz] fixed and added reg test

47bfd50 [Burak Yavuz] pushing


After https://github.com/apache/spark/pull/7263 it is pretty straightforward to add Python wrappers.

Author: MechCoder <manojkumarsivaraj334@gmail.com>

Closes #7930 from MechCoder/spark-9533 and squashes the following commits:

1bea394 [MechCoder] make getVectors a lazy val

5522756 [MechCoder] [SPARK-9533] [PySpark] [ML] Add missing methods in Word2Vec ML


I have added support for stats in LogisticRegression. The API is similar to that of LinearRegression with LogisticRegressionTrainingSummary and LogisticRegressionSummary

I have some queries and asked them inline.

Author: MechCoder <manojkumarsivaraj334@gmail.com>

Closes #7538 from MechCoder/log_reg_stats and squashes the following commits:

2e9f7c7 [MechCoder] Change defs into lazy vals

d775371 [MechCoder] Clean up class inheritance

9586125 [MechCoder] Add abstraction to handle Multiclass Metrics

40ad8ef [MechCoder] minor

640376a [MechCoder] remove unnecessary dataframe stuff and add docs

80d9954 [MechCoder] Added tests

fbed861 [MechCoder] DataFrame support for metrics

70a0fc4 [MechCoder] [SPARK-9112] [ML] Implement Stats for LogisticRegression


This is a follow-up of #7929.

We found that the Jenkins SBT master build still fails because of the Hadoop shims loading issue, but the failure doesn't appear to be deterministic. My suspicion is that the Hadoop `VersionInfo` class may fail to inspect the Hadoop version, so the shims loading branch is skipped.

This PR tries to make the fix more robust:

1. When Hadoop version is available, we load `Hadoop20SShims` for versions <= 2.0.x as srowen suggested in PR #7929.

2. Otherwise, we use `Path.getPathWithoutSchemeAndAuthority` as a probe method, which doesn't exist in Hadoop 1.x or 2.0.x. If this method is not found, `Hadoop20SShims` is also loaded.
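
The two-step decision above can be sketched in a language-neutral way. The function and parameter names are hypothetical. `has_probe_method` stands for the outcome of looking up `Path.getPathWithoutSchemeAndAuthority`. Falling back to the probe for unparseable version strings is an assumption.

```python
import re


def needs_hadoop20s_shims(version, has_probe_method: bool) -> bool:
    """Return True when Hadoop20SShims should be loaded."""
    if version:
        match = re.match(r"(\d+)\.(\d+)", version)
        if match:
            major, minor = int(match.group(1)), int(match.group(2))
            # Step 1: a usable version string decides directly (<= 2.0.x).
            return (major, minor) <= (2, 0)
    # Step 2: no usable version, so fall back to probing for the method.
    return not has_probe_method
```

For example, `needs_hadoop20s_shims("2.0.0-mr1-cdh4.1.1", True)` selects the 2.0 shims even though the major version is 2.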

Author: Cheng Lian <lian@databricks.com>

Closes #7994 from liancheng/spark-9593/fix-hadoop-shims and squashes the following commits:

e1d3d70 [Cheng Lian] Fixes typo in comments

8d971da [Cheng Lian] Makes the Hadoop shims loading fix more robust


This PR also changes UnsafeProjection to use `def` instead of `lazy val`, because it's not thread safe.

TODO: clean up the debug code once the flaky test has passed 100 times.

Author: Davies Liu <davies@databricks.com>

Closes #7940 from davies/semijoin and squashes the following commits:

93baac7 [Davies Liu] fix outerjoin

5c40ded [Davies Liu] address comments

aa3de46 [Davies Liu] Merge branch 'master' of github.com:apache/spark into semijoin

7590a25 [Davies Liu] Merge branch 'master' of github.com:apache/spark into semijoin

2d4085b [Davies Liu] use def for resultProjection

0833407 [Davies Liu] Merge branch 'semijoin' of github.com:davies/spark into semijoin

e0d8c71 [Davies Liu] use lazy val

6a59e8f [Davies Liu] Update HashedRelation.scala

0fdacaf [Davies Liu] fix broadcast and thread-safety of UnsafeProjection

2fc3ef6 [Davies Liu] reproduce failure in semijoin


To support updating a variable-length (actually fixed-length) object, its space must be preserved even when the value is null. We can no longer call setNullAt(i) for it, because setNullAt(i) removes the offset of the preserved space; setDecimal(i, null, precision) should be called instead.

After this, we can do hash-based aggregation on DecimalType with precision > 18. In a test, this decreased the end-to-end run time of an aggregation query from 37 seconds (sort based) to 24 seconds (hash based).

cc rxin

Author: Davies Liu <davies@databricks.com>

Closes #7978 from davies/update_decimal and squashes the following commits:

bed8100 [Davies Liu] isSettable -> isMutable

923c9eb [Davies Liu] address comments and fix bug

385891d [Davies Liu] Merge branch 'master' of github.com:apache/spark into update_decimal

36a1872 [Davies Liu] fix tests

cd6c524 [Davies Liu] support set decimal with precision > 18


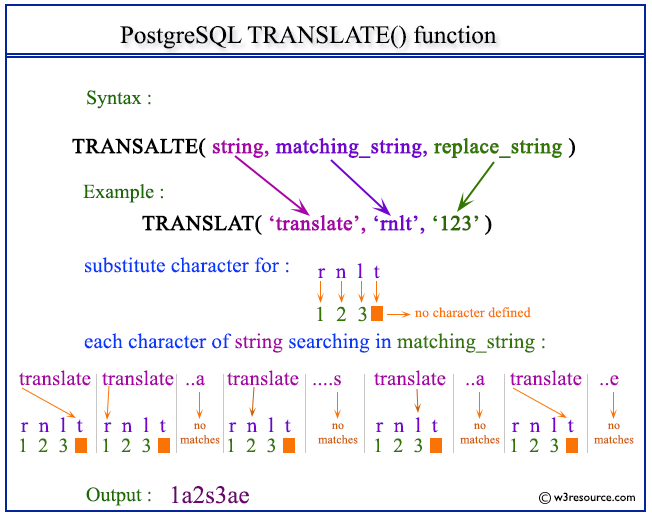

Author: zhichao.li <zhichao.li@intel.com>

Closes #7709 from zhichao-li/translate and squashes the following commits:

9418088 [zhichao.li] refine checking condition

f2ab77a [zhichao.li] clone string

9d88f2d [zhichao.li] fix indent

6aa2962 [zhichao.li] style

e575ead [zhichao.li] add python api

9d4bab0 [zhichao.li] add special case for fodable and refactor unittest

eda7ad6 [zhichao.li] update to use TernaryExpression

cdfd4be [zhichao.li] add function translate


UserDefinedAggregateFunction.

https://issues.apache.org/jira/browse/SPARK-9664

Author: Yin Huai <yhuai@databricks.com>

Closes #7982 from yhuai/udafRegister and squashes the following commits:

0cc2287 [Yin Huai] Remove UDAFRegistration and add apply to UserDefinedAggregateFunction.


The new aggregate replaces the old GeneratedAggregate.

Author: Reynold Xin <rxin@databricks.com>

Closes #7983 from rxin/remove-generated-agg and squashes the following commits:

8334aae [Reynold Xin] [SPARK-9674][SQL] Remove GeneratedAggregate.


compatible format when possible

This PR is a fork of PR #5733 authored by chenghao-intel. For committers who are going to merge this PR, please set the author to "Cheng Hao <hao.cheng@intel.com>".

----

When a data source relation meets the following requirements, we persist it in Hive compatible format, so that other systems like Hive can access it:

1. It's a `HadoopFsRelation`

2. It has only one input path

3. It's non-partitioned

4. Its data source provider can be naturally mapped to a Hive builtin SerDe (e.g. ORC and Parquet)
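
The four requirements above amount to a single predicate. The sketch below uses hypothetical field names and lists only the two providers the text mentions; the real check lives in the Hive metastore catalog code and may cover more cases.

```python
from dataclasses import dataclass
from typing import List

# Only the two providers named in the text; the real mapping may be larger.
HIVE_SERDE_PROVIDERS = {"orc", "parquet"}


@dataclass
class RelationInfo:  # hypothetical summary of a data source relation
    is_hadoop_fs_relation: bool
    input_paths: List[str]
    partition_columns: List[str]
    provider: str


def can_persist_hive_compatible(rel: RelationInfo) -> bool:
    return (
        rel.is_hadoop_fs_relation                            # requirement 1
        and len(rel.input_paths) == 1                        # requirement 2
        and not rel.partition_columns                        # requirement 3
        and rel.provider.lower() in HIVE_SERDE_PROVIDERS     # requirement 4
    )
```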

Author: Cheng Lian <lian@databricks.com>

Author: Cheng Hao <hao.cheng@intel.com>

Closes #7967 from liancheng/spark-6923/refactoring-pr-5733 and squashes the following commits:

5175ee6 [Cheng Lian] Fixes an oudated comment

3870166 [Cheng Lian] Fixes build error and comments

864acee [Cheng Lian] Refactors PR #5733

3490cdc [Cheng Hao] update the scaladoc

6f57669 [Cheng Hao] write schema info to hivemetastore for data source


UnsafeFixedWidthAggregationMap

This PR has the following three small fixes.

1. UnsafeKVExternalSorter does not use 0 as the initialSize to create an UnsafeInMemorySorter if its BytesToBytesMap is empty.

2. We will not spill an InMemorySorter if it is empty.

3. We will not add a SpillReader to a SpillMerger if this SpillReader is empty.

JIRA: https://issues.apache.org/jira/browse/SPARK-9611

Author: Yin Huai <yhuai@databricks.com>

Closes #7948 from yhuai/unsafeEmptyMap and squashes the following commits:

9727abe [Yin Huai] Address Josh's comments.

34b6f76 [Yin Huai] 1. UnsafeKVExternalSorter does not use 0 as the initialSize to create an UnsafeInMemorySorter if its BytesToBytesMap is empty. 2. Do not spill a InMemorySorter if it is empty. 3. Do not add spill to SpillMerger if this spill is empty.


First, it's probably a bad idea to call generated Scala methods

from Java. In this case, the method being called wasn't actually

"Utils.createTempDir()", but actually the method that returns the

first default argument to the actual createTempDir method, which

is just the location of java.io.tmpdir; meaning that all tests in

the class were using the same temp dir, and thus affecting each

other.

Second, spillingOccursInResponseToMemoryPressure was not writing

enough records to actually cause a spill.

Author: Marcelo Vanzin <vanzin@cloudera.com>

Closes #7970 from vanzin/SPARK-9651 and squashes the following commits:

74d357f [Marcelo Vanzin] Clean up temp dir on test tear down.

a64f36a [Marcelo Vanzin] [SPARK-9651] Fix UnsafeExternalSorterSuite.


common types into strings

JIRA: https://issues.apache.org/jira/browse/SPARK-6591

Author: Yijie Shen <henry.yijieshen@gmail.com>

Closes #7926 from yjshen/py_dsload_opt and squashes the following commits:

b207832 [Yijie Shen] fix style

efdf834 [Yijie Shen] resolve comment

7a8f6a2 [Yijie Shen] lowercase

822e769 [Yijie Shen] convert load opts to string


Add VectorSlicer transformer to spark.ml, with features specified as either indices or names. Transfers feature attributes for selected features.

Updated version of [https://github.com/apache/spark/pull/5731]

CC: yinxusen This updates your PR. You'll still be the primary author of this PR.

CC: mengxr

Author: Xusen Yin <yinxusen@gmail.com>

Author: Joseph K. Bradley <joseph@databricks.com>

Closes #7972 from jkbradley/yinxusen-SPARK-5895 and squashes the following commits:

b16e86e [Joseph K. Bradley] fixed scala style

71c65d2 [Joseph K. Bradley] fix import order

86e9739 [Joseph K. Bradley] cleanups per code review

9d8d6f1 [Joseph K. Bradley] style fix

83bc2e9 [Joseph K. Bradley] Updated VectorSlicer

98c6939 [Xusen Yin] fix style error

ecbf2d3 [Xusen Yin] change interfaces and params

f6be302 [Xusen Yin] Merge branch 'master' into SPARK-5895

e4781f2 [Xusen Yin] fix commit error

fd154d7 [Xusen Yin] add test suite of vector slicer

17171f8 [Xusen Yin] fix slicer

9ab9747 [Xusen Yin] add vector slicer

aa5a0bf [Xusen Yin] add vector slicer


newOrdering in SMJ

This patch renames `RowOrdering` to `InterpretedOrdering` and updates SortMergeJoin to use the `SparkPlan` methods for constructing its ordering so that it may benefit from codegen.

This is an updated version of #7408.

Author: Josh Rosen <joshrosen@databricks.com>

Closes #7973 from JoshRosen/SPARK-9054 and squashes the following commits:

e610655 [Josh Rosen] Add comment RE: Ascending ordering

34b8e0c [Josh Rosen] Import ordering

be19a0f [Josh Rosen] [SPARK-9054] [SQL] Rename RowOrdering to InterpretedOrdering; use newOrdering in more places.


mengxr

Author: Feynman Liang <fliang@databricks.com>

Closes #7974 from feynmanliang/SPARK-9657 and squashes the following commits:

7ca533f [Feynman Liang] Fix return type of getMaxPatternLength


```

Error Message

Failed to bind to: /127.0.0.1:7093: Service 'sparkMaster' failed after 16 retries!

Stacktrace

java.net.BindException: Failed to bind to: /127.0.0.1:7093: Service 'sparkMaster' failed after 16 retries!

at org.jboss.netty.bootstrap.ServerBootstrap.bind(ServerBootstrap.java:272)

at akka.remote.transport.netty.NettyTransport$$anonfun$listen$1.apply(NettyTransport.scala:393)

at akka.remote.transport.netty.NettyTransport$$anonfun$listen$1.apply(NettyTransport.scala:389)

at scala.util.Success$$anonfun$map$1.apply(Try.scala:206)

at scala.util.Try$.apply(Try.scala:161)

```

Author: Andrew Or <andrew@databricks.com>

Closes #7968 from andrewor14/fix-master-flaky-test and squashes the following commits:

fcc42ef [Andrew Or] Randomize port


This continues tarekauel's work in #7778.

Author: Liang-Chi Hsieh <viirya@appier.com>

Author: Tarek Auel <tarek.auel@googlemail.com>

Closes #7893 from viirya/codegen_in and squashes the following commits:

81ff97b [Liang-Chi Hsieh] For comments.

47761c6 [Liang-Chi Hsieh] Merge remote-tracking branch 'upstream/master' into codegen_in

cf4bf41 [Liang-Chi Hsieh] For comments.

f532b3c [Liang-Chi Hsieh] Merge remote-tracking branch 'upstream/master' into codegen_in

446bbcd [Liang-Chi Hsieh] Fix bug.

b3d0ab4 [Liang-Chi Hsieh] Merge remote-tracking branch 'upstream/master' into codegen_in

4610eff [Liang-Chi Hsieh] Relax the types of references and update optimizer test.

224f18e [Liang-Chi Hsieh] Beef up the test cases for In and InSet to include all primitive data types.

86dc8aa [Liang-Chi Hsieh] Only convert In to InSet when the number of items in set is more than the threshold.

b7ded7e [Tarek Auel] [SPARK-9403][SQL] codeGen in / inSet


This is a follow-up of https://github.com/apache/spark/pull/7920 to fix comments.

Author: Yin Huai <yhuai@databricks.com>

Closes #7964 from yhuai/SPARK-9141-follow-up and squashes the following commits:

4d0ee80 [Yin Huai] Fix comments.


Currently, when we kill an application on YARN, `sc.stop()` is called from the YARN application state monitor thread, and `YarnClientSchedulerBackend.stop()` then calls `interrupt`. This prevents SparkContext from stopping fully, because we are still waiting for the executors to exit.

Author: linweizhong <linweizhong@huawei.com>

Closes #7846 from Sephiroth-Lin/SPARK-9519 and squashes the following commits:

1ae736d [linweizhong] Update comments

2e8e365 [linweizhong] Add comment explaining the code

ad0e23b [linweizhong] Update

243d2c7 [linweizhong] Confirm stop sc successfully when application was killed


Currently we collapse successive projections that are added by `withColumn`. However, this optimization violates the constraint that adding nodes to a plan will never change its analyzed form and thus breaks caching. Instead of doing early optimization, in this PR I just fix some low-hanging slowness in the analyzer. In particular, I add a mechanism for skipping already analyzed subplans, `resolveOperators` and `resolveExpression`. Since trees are generally immutable after construction, it's safe to annotate a plan as already analyzed as any transformation will create a new tree with this bit no longer set.

Together these result in a faster analyzer than before, even with added timing instrumentation.

```

Original Code

[info] 3430ms

[info] 2205ms

[info] 1973ms

[info] 1982ms

[info] 1916ms

Without Project Collapsing in DataFrame

[info] 44610ms

[info] 45977ms

[info] 46423ms

[info] 46306ms

[info] 54723ms

With analyzer optimizations

[info] 6394ms

[info] 4630ms

[info] 4388ms

[info] 4093ms

[info] 4113ms

With resolveOperators

[info] 2495ms

[info] 1380ms

[info] 1685ms

[info] 1414ms

[info] 1240ms

```
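
The "already analyzed" marker described above can be sketched on a toy immutable tree. `Plan`, `mark_analyzed` and this `resolve_operators` are hypothetical simplifications, not Catalyst's API. The point is that rebuilt nodes start with the flag unset, and flagged subtrees are skipped.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Tuple


@dataclass(frozen=True)
class Plan:
    name: str
    children: Tuple["Plan", ...] = ()
    # Any newly constructed node starts out as not analyzed.
    analyzed: bool = field(default=False, compare=False)


def mark_analyzed(plan: Plan) -> Plan:
    return Plan(plan.name, tuple(mark_analyzed(c) for c in plan.children), analyzed=True)


def resolve_operators(plan: Plan, rule: Callable[[Plan], Plan], visited: List[str]) -> Plan:
    if plan.analyzed:
        return plan  # skip the whole subtree; it was analyzed earlier
    visited.append(plan.name)
    children = tuple(resolve_operators(c, rule, visited) for c in plan.children)
    return rule(Plan(plan.name, children))  # rebuilt node: flag is unset again
```

Wrapping an analyzed plan in a new operator (as `withColumn` does) then only costs analysis of the new node.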

Author: Michael Armbrust <michael@databricks.com>

Closes #7920 from marmbrus/withColumnCache and squashes the following commits:

2145031 [Michael Armbrust] fix hive udfs tests

5a5a525 [Michael Armbrust] remove wrong comment

7a507d5 [Michael Armbrust] style

b59d710 [Michael Armbrust] revert small change

1fa5949 [Michael Armbrust] move logic into LogicalPlan, add tests

0e2cb43 [Michael Armbrust] Merge remote-tracking branch 'origin/master' into withColumnCache

c926e24 [Michael Armbrust] naming

e593a2d [Michael Armbrust] style

f5a929e [Michael Armbrust] [SPARK-9141][SQL] Remove project collapsing from DataFrame API

38b1c83 [Michael Armbrust] WIP


mengxr This adds the `BlockMatrix` to PySpark. I have the conversions to `IndexedRowMatrix` and `CoordinateMatrix` ready as well, so once PR #7554 is completed (which relies on PR #7746), this PR can be finished.

Author: Mike Dusenberry <mwdusenb@us.ibm.com>

Closes #7761 from dusenberrymw/SPARK-6486_Add_BlockMatrix_to_PySpark and squashes the following commits:

27195c2 [Mike Dusenberry] Adding one more check to _convert_to_matrix_block_tuple, and a few minor documentation changes.

ae50883 [Mike Dusenberry] Minor update: BlockMatrix should inherit from DistributedMatrix.

b8acc1c [Mike Dusenberry] Moving BlockMatrix to pyspark.mllib.linalg.distributed, updating the logic to match that of the other distributed matrices, adding conversions, and adding documentation.

c014002 [Mike Dusenberry] Using properties for better documentation.

3bda6ab [Mike Dusenberry] Adding documentation.

8fb3095 [Mike Dusenberry] Small cleanup.

e17af2e [Mike Dusenberry] Adding BlockMatrix to PySpark.


Support partitioning for the JSON data source.

Still 2 open issues for the `HadoopFsRelation`

- `refresh()` will invoke `discoveryPartition()`, which auto-infers the data types of the partition columns and may conflict with the given partition columns. (TODO: enable `HadoopFsRelationSuite.Partition column type casting`)

- When inserting data into a cached HadoopFsRelation-based table, we need to invalidate the cache after the insertion (TODO: enable `InsertSuite.Caching`)

Author: Cheng Hao <hao.cheng@intel.com>

Closes #7696 from chenghao-intel/json and squashes the following commits:

d90b104 [Cheng Hao] revert the change for JacksonGenerator.apply

307111d [Cheng Hao] fix bug in the unit test

8738c8a [Cheng Hao] fix bug in unit testing

35f2cde [Cheng Hao] support partition for json format


The user specified schema is currently ignored when loading Parquet files.

One workaround is to use the `format` and `load` methods instead of `parquet`, e.g.:

```

val schema = ???

// schema is ignored

sqlContext.read.schema(schema).parquet("hdfs:///test")

// schema is retained

sqlContext.read.schema(schema).format("parquet").load("hdfs:///test")

```

The fix is simple, but I wonder if the `parquet` method should instead be written in a similar fashion to `orc`:

```

def parquet(path: String): DataFrame = format("parquet").load(path)

```

Author: Nathan Howell <nhowell@godaddy.com>

Closes #7947 from NathanHowell/SPARK-9618 and squashes the following commits:

d1ea62c [Nathan Howell] [SPARK-9618] [SQL] Use the specified schema when reading Parquet files


This PR works around CDH Hadoop versions like 2.0.0-mr1-cdh4.1.1.

Internally, Hive `ShimLoader` tries to load different versions of Hadoop shims by checking version information gathered from Hadoop jar files. If the major version number is 1, `Hadoop20SShims` will be loaded. Otherwise, if the major version number is 2, `Hadoop23Shims` will be chosen. However, CDH Hadoop versions like 2.0.0-mr1-cdh4.1.1 have 2 as the major version number but contain Hadoop 1 code. This confuses Hive `ShimLoader`, which then loads the wrong version of the shims.

In this PR we check for existence of the `Path.getPathWithoutSchemeAndAuthority` method, which doesn't exist in Hadoop 1 (it's also the method that reveals this shims loading issue), and load `Hadoop20SShims` when it doesn't exist.
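The method-existence check can be sketched with plain Java reflection. This is only an illustration under stated assumptions, not the code from the PR: `ShimProbe`, `hasMethod` and `chooseShims` are hypothetical names, and the class is looked up by name so the snippet runs without Hadoop on the classpath.

```scala
import scala.util.Try

object ShimProbe {
  // Returns true if the named class can be loaded and exposes a public
  // method with the given name (any parameter list).
  def hasMethod(className: String, methodName: String): Boolean =
    Try(Class.forName(className)).toOption
      .exists(cls => cls.getMethods.exists(_.getName == methodName))

  // Hypothetical selection logic: fall back to the Hadoop 1 shims when the
  // probe method is missing, as it would be for Hadoop 1 code.
  def chooseShims(pathClassName: String): String =
    if (hasMethod(pathClassName, "getPathWithoutSchemeAndAuthority")) "Hadoop23Shims"
    else "Hadoop20SShims"
}
```

With Hadoop available, `ShimProbe.chooseShims("org.apache.hadoop.fs.Path")` would pick the shims name from the probe result. The sketch does not restrict the check to particular version strings.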

Author: Cheng Lian <lian@databricks.com>

Closes #7929 from liancheng/spark-9593/fix-hadoop-shims-loading and squashes the following commits:

c99b497 [Cheng Lian] Narrows down the fix to handle "2.0.0-*cdh4*" Hadoop versions only

b17e955 [Cheng Lian] Updates comments

490d8f2 [Cheng Lian] Fixes Scala style issue

9c6c12d [Cheng Lian] Fixes Hadoop shims loading

|

| |

- pass `$ZINC_PORT` to zinc status/shutdown commands

- fix path check that sets `$ZINC_INSTALL_FLAG`, which was incorrectly causing zinc to be shut down and restarted every time (with mismatched ports on those commands to boot)

- pass `-DzincPort=${ZINC_PORT}` to maven, to use the correct zinc port when building

Author: Ryan Williams <ryan.blake.williams@gmail.com>

Closes #7944 from ryan-williams/zinc-status and squashes the following commits:

619c520 [Ryan Williams] fix zinc status/shutdown commands

|

| |

readability

JIRA: https://issues.apache.org/jira/browse/SPARK-9628

Author: Yijie Shen <henry.yijieshen@gmail.com>

Closes #7953 from yjshen/datetime_alias and squashes the following commits:

3cac3cc [Yijie Shen] rename int to SQLDate, long to SQLTimestamp for better readability

|

| |

operator and add a new SQL tab

This PR includes the following changes:

### SPARK-8862: Add basic instrumentation to each SparkPlan operator

A SparkPlan can override `def accumulators: Map[String, Accumulator[_]]` to expose metrics that can be displayed in the UI. The UI uses them to track updates and show them on the web page in real time.

### SparkSQLExecution and SQLSparkListener

`SparkSQLExecution.withNewExecutionId` will set `spark.sql.execution.id` to the local properties so that we can use it to track all jobs that belong to the same query.
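The idea of tagging everything that runs inside a block with one execution id can be sketched as follows. This is a simplified stand-in, not Spark's implementation: `ExecutionIdSketch` and its thread-local `Properties` are hypothetical substitutes for the SparkContext local properties, and restoring the previous value afterwards is an assumption of this sketch.

```scala
import java.util.Properties
import java.util.concurrent.atomic.AtomicLong

object ExecutionIdSketch {
  val ExecutionIdKey = "spark.sql.execution.id"

  // Stand-in for the per-thread local properties of a SparkContext.
  private val localProps = new ThreadLocal[Properties] {
    override def initialValue(): Properties = new Properties()
  }
  private val nextId = new AtomicLong(0)

  def getLocalProperty(key: String): String = localProps.get.getProperty(key)

  // Sets a fresh execution id for the duration of `body`, then restores
  // whatever value (possibly none) was there before.
  def withNewExecutionId[T](body: => T): T = {
    val props = localProps.get
    val previous = props.getProperty(ExecutionIdKey)
    props.setProperty(ExecutionIdKey, nextId.getAndIncrement().toString)
    try body
    finally {
      if (previous == null) props.remove(ExecutionIdKey)
      else props.setProperty(ExecutionIdKey, previous)
    }
  }
}
```

Any job submitted inside `withNewExecutionId { ... }` on the same thread would see the same id through `getLocalProperty`, which is what lets a listener group jobs by query.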

`SQLSparkListener` is a listener that tracks all accumulator updates of all tasks for a query. It receives them from heartbeats so that the UI can query them in real time.

When a query starts running, `SQLSparkListener.onExecutionStart` is called; when it finishes, `SQLSparkListener.onExecutionEnd` is called. The Spark jobs with the same execution id are tracked and stored with this query.

`SQLSparkListener` has to store the accumulator updates of each task separately. When a task fails and is retried, the old accumulator updates must be dropped. Because changes to an accumulator cannot be reverted, we have to maintain these updates ourselves so that the updates of a failed task can be dropped.
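The bookkeeping described above can be sketched as a small store keyed by task attempt. This is a minimal sketch, not the listener from the PR: `TaskMetricsSketch`, `ExecutionMetrics` and its methods are hypothetical names, accumulator values are simplified to `Long`, and each heartbeat is assumed to carry the latest cumulative value for its attempt.

```scala
import scala.collection.mutable

object TaskMetricsSketch {
  // accumulator id -> latest value reported by one task attempt
  type Updates = Map[Long, Long]

  final class ExecutionMetrics {
    // Updates are kept per (taskId, attempt) so a failed attempt can be dropped.
    private val perAttempt = mutable.Map.empty[(Long, Int), Updates]

    // A heartbeat replaces the previous snapshot for that attempt.
    def onHeartbeat(taskId: Long, attempt: Int, updates: Updates): Unit =
      perAttempt.update((taskId, attempt), updates)

    // Dropping the entry discards everything the failed attempt reported.
    def onTaskFailed(taskId: Long, attempt: Int): Unit =
      perAttempt.remove((taskId, attempt))

    // Sum the surviving snapshots per accumulator id.
    def totals: Map[Long, Long] =
      perAttempt.values.toSeq.flatMap(_.toSeq)
        .groupBy(_._1)
        .map { case (id, pairs) => id -> pairs.map(_._2).sum }
  }
}
```

Keeping the raw per-attempt snapshots, rather than a single running total, is what makes it possible to discard a failed attempt without touching the other tasks' contributions.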

### SPARK-8862: A new SQL tab

Includes two pages:

#### A page for all DataFrame/SQL queries

It will show the running, completed and failed queries in 3 tables. It also displays the jobs and their links for a query in each row.

#### A detail page for a DataFrame/SQL query

This page also shows the SparkPlan metrics in real time. Run a long-running query, such as

```

val testData = sc.parallelize((1 to 1000000).map(i => (i, i.toString))).toDF()

testData.select($"_1").filter($"_1" < 1000).foreach(_ => Thread.sleep(60))

```

and you will see the metrics keep updating in real-time.

Author: zsxwing <zsxwing@gmail.com>

Closes #7774 from zsxwing/sql-ui and squashes the following commits:

5a2bc99 [zsxwing] Remove UISeleniumSuite and its dependency

57d4cd2 [zsxwing] Use VisibleForTesting annotation

cc1c736 [zsxwing] Add SparkPlan.trackNumOfRowsEnabled to make subclasses easy to track the number of rows; fix the issue that the "save" action cannot collect metrics

3771ab0 [zsxwing] Register SQL metrics accmulators

3a101c0 [zsxwing] Change prepareCalled's type to AtomicBoolean for thread-safety

b8d5605 [zsxwing] Make prepare idempotent; call children's prepare in SparkPlan.prepare; change doPrepare to def

4ed11a1 [zsxwing] var -> val

332639c [zsxwing] Ignore UISeleniumSuite and SQLListenerSuite."no memory leak" because of SPARK-9580

bb52359 [zsxwing] Address other commens in SQLListener

c4d0f5d [zsxwing] Move newPredicate out of the iterator loop

957473c [zsxwing] Move STATIC_RESOURCE_DIR to object SQLTab

7ab4816 [zsxwing] Make SparkPlan accumulator API private[sql]

dae195e [zsxwing] Fix the code style and comments

3a66207 [zsxwing] Ignore irrelevant accumulators

b8484a1 [zsxwing] Merge branch 'master' into sql-ui

9406592 [zsxwing] Implement the SparkPlan viz

4ebce68 [zsxwing] Add SparkPlan.prepare to support BroadcastHashJoin to run background work in parallel

ca1811f [zsxwing] Merge branch 'master' into sql-ui

fef6fc6 [zsxwing] Fix a corner case

25f335c [zsxwing] Fix the code style

6eae828 [zsxwing] SQLSparkListener -> SQLListener; SparkSQLExecutionUIData -> SQLExecutionUIData; SparkSQLExecution -> SQLExecution

822af75 [zsxwing] Add SQLSparkListenerSuite and fix the issue about onExecutionEnd and onJobEnd

6be626f [zsxwing] Add UISeleniumSuite to test UI

d02a24d [zsxwing] Make ExecutionPage private

23abf73 [zsxwing] [SPARK-8862][SPARK-8862][SQL] Add basic instrumentation to each SparkPlan operator and add a new SQL tab

|

| |

windowed join in streaming guide doc

Author: Namit Katariya <katariya.namit@gmail.com>

Closes #7935 from namitk/SPARK-9601 and squashes the following commits:

03b5784 [Namit Katariya] [SPARK-9601] Fix signature of JavaPairDStream for stream-stream and windowed join in streaming guide doc

|