run-tests.py."

This reverts commit 8abef21dac1a6538c4e4e0140323b83d804d602b.

---

This change does two things:

- Tags a few tests and adds a mechanism to the build for disabling those tags, in both Maven and sbt, for both JUnit and ScalaTest suites.

- Adds logic to run-tests.py to disable some tags depending on which files have changed. This is used to skip expensive tests when a module has not been changed explicitly, which speeds up testing for changes that don't directly affect those modules.
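The file-based tag selection can be sketched as follows. This is a minimal illustration, not the actual `run-tests.py` logic: the module names, path prefixes, and tag names in `MODULE_TAGS` are hypothetical placeholders.

```python
# Hypothetical mapping from a module to the source paths it owns and the
# expensive test tags that may be skipped when none of those paths changed.
MODULE_TAGS = {
    "hive": {"paths": ("sql/hive/",), "tags": {"ExtendedHiveTest"}},
    "yarn": {"paths": ("yarn/",), "tags": {"ExtendedYarnTest"}},
}


def tags_to_exclude(changed_files):
    """Return the set of test tags to disable for the given changed files."""
    excluded = set()
    for module in MODULE_TAGS.values():
        touched = any(
            path.startswith(prefix)
            for path in changed_files
            for prefix in module["paths"]
        )
        if not touched:
            # The module was not changed directly, so skip its expensive tests.
            excluded |= module["tags"]
    return excluded
```

A change touching only `sql/hive/` would keep the Hive-tagged tests and exclude the YARN-tagged ones; a change touching both modules excludes nothing.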

Author: Marcelo Vanzin <vanzin@cloudera.com>

Closes #8437 from vanzin/test-tags.

---

Author: Reynold Xin <rxin@databricks.com>

Closes #8350 from rxin/1.6.

---

CC rxin marmbrus

Author: Feynman Liang <fliang@databricks.com>

Closes #8523 from feynmanliang/SPARK-10351.

---

In UnsafeRow, we use the private field of BigInteger for better performance, but it actually didn't contribute much (3% in one benchmark) to end-to-end runtime, and it makes the code non-portable (it may fail on other JVM implementations).

So we should use the public API instead.

cc rxin

Author: Davies Liu <davies@databricks.com>

Closes #8286 from davies/portable_decimal.

---

Tiny modification to a few comments to make `sbt publishLocal` work again.

Author: Herman van Hovell <hvanhovell@questtec.nl>

Closes #8209 from hvanhovell/SPARK-9980.

---

The BYTE_ARRAY_OFFSET can differ between JVMs with different configurations (for example, with different heap sizes: 24 if the heap is larger than 32G, otherwise 16), so the offset of a UTF8String is not portable, and we should handle that during serialization.
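A minimal sketch of the idea, assuming a UTF8String-like value is described by a base array, an absolute offset, and a length. The point is to serialize the length and the bytes (located relative to the writer's array offset) rather than the raw offset, and let the reader re-base them against its own offset. `Utf8View`, `serialize`, `deserialize`, and `to_bytes` are hypothetical names, and the two offset values in the usage only simulate differently configured JVMs.

```python
import struct
from dataclasses import dataclass


@dataclass
class Utf8View:
    base: bytes      # backing byte array
    offset: int      # absolute offset = BYTE_ARRAY_OFFSET + index into base
    num_bytes: int


def to_bytes(s: Utf8View, byte_array_offset: int) -> bytes:
    start = s.offset - byte_array_offset  # index into the backing array
    return s.base[start:start + s.num_bytes]


def serialize(s: Utf8View, byte_array_offset: int) -> bytes:
    # Write only the length and the bytes, never the JVM-specific offset.
    return struct.pack(">i", s.num_bytes) + to_bytes(s, byte_array_offset)


def deserialize(data: bytes, byte_array_offset: int) -> Utf8View:
    (n,) = struct.unpack_from(">i", data, 0)
    payload = bytes(data[4:4 + n])
    # Re-base against the reader's own BYTE_ARRAY_OFFSET.
    return Utf8View(payload, byte_array_offset, n)
```

For example, a string written by a "JVM" whose offset is 24 can be read back by one whose offset is 16, because only the bytes cross the wire.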

Author: Davies Liu <davies@databricks.com>

Closes #8210 from davies/serialize_utf8string.

---

Currently, we access `page.pageNumber` after the page is freed; by then it could have been modified by another thread, causing an NPE.

The same TaskMemoryManager can be used by multiple threads (for example, Python UDF and TransportScript), so allocating and freeing memory and pages must be thread-safe. The underlying BitSet and HashSet are not thread-safe, so access to them should be inside a synchronized block.
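A simplified sketch of the locking pattern, using a Python lock in place of a Java `synchronized` block. `PageTable` is a hypothetical stand-in for the page bookkeeping inside TaskMemoryManager: a list of booleans plays the role of the BitSet, and a dict tracks allocated pages.

```python
import threading


class PageTable:
    """Hypothetical, simplified page-number allocator shared by several threads."""

    def __init__(self, capacity: int):
        self._lock = threading.Lock()
        self._allocated = [False] * capacity  # plays the role of the BitSet
        self._pages = {}                      # page number -> page object

    def allocate(self, page) -> int:
        with self._lock:  # find-free-slot and mark-used must be one atomic step
            try:
                number = self._allocated.index(False)
            except ValueError:
                raise MemoryError("no free page numbers") from None
            self._allocated[number] = True
            self._pages[number] = page
            return number

    def free(self, page_number: int) -> None:
        with self._lock:
            # Finish using page_number before clearing its bit: once it is
            # released, another thread may immediately reuse that number.
            del self._pages[page_number]
            self._allocated[page_number] = False
```

Without the lock, two threads could both observe the same free slot and hand out the same page number.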

cc JoshRosen

Author: Davies Liu <davies@databricks.com>

Closes #8177 from davies/memory_manager.

---

PlatformDependent.UNSAFE is way too verbose.

Author: Reynold Xin <rxin@databricks.com>

Closes #8094 from rxin/SPARK-9815 and squashes the following commits:

229b603 [Reynold Xin] [SPARK-9815] Rename PlatformDependent.UNSAFE -> Platform.

---

This PR enables converting interval terms in HiveQL to CalendarInterval literals.

JIRA: https://issues.apache.org/jira/browse/SPARK-9728

Author: Yijie Shen <henry.yijieshen@gmail.com>

Closes #8034 from yjshen/interval_hiveql and squashes the following commits:

7fe9a5e [Yijie Shen] declare throw exception and add unit test

fce7795 [Yijie Shen] convert hiveql interval term into CalendarInterval literal

---

Previously, we used 64MB as the default page size, which was far too large for many Spark applications (especially single-node ones).

This patch changes it so that the default page size, if unset by the user, is determined by the number of cores available and the total execution memory available.
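One plausible shape for such a heuristic: divide the execution memory by the number of cores and a safety factor, round up to a power of two, and clamp to a range. The safety factor of 16 and the 1 MB / 64 MB bounds below are assumptions for illustration (64 MB matches the previous default), not necessarily the values this patch uses.

```python
def next_power_of_2(n: int) -> int:
    """Smallest power of two >= n (1 for n <= 1)."""
    return 1 if n <= 1 else 1 << (n - 1).bit_length()


def default_page_size(max_execution_memory: int, num_cores: int,
                      safety_factor: int = 16,
                      min_page: int = 1 << 20,      # 1 MB (assumed floor)
                      max_page: int = 64 << 20):    # 64 MB (assumed cap)
    # Give each core room for several pages, then round to a power of two.
    size = next_power_of_2(max_execution_memory // num_cores // safety_factor)
    return min(max_page, max(min_page, size))
```

With 1 GB of execution memory and 8 cores this yields 8 MB pages; a very large heap is capped at 64 MB, and a tiny one is raised to the 1 MB floor.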

Author: Reynold Xin <rxin@databricks.com>

Closes #8012 from rxin/pagesize and squashes the following commits:

16f4756 [Reynold Xin] Fixed failing test.

5afd570 [Reynold Xin] private...

0d5fb98 [Reynold Xin] Update default value.

674a6cd [Reynold Xin] Address review feedback.

dc00e05 [Reynold Xin] Merge with master.

73ebdb6 [Reynold Xin] [SPARK-9700] Pick default page size more intelligently.

---

In order to support updating a variable-length (actually fixed-length) object in place, its space must be reserved even when it is null. We therefore can no longer call setNullAt(i) for it, because setNullAt(i) would remove the offset of the reserved space; setDecimal(i, null, precision) should be called instead.

After this, we can do hash-based aggregation on DecimalType with precision > 18. In one test, this decreased the end-to-end run time of an aggregation query from 37 seconds (sort-based) to 24 seconds (hash-based).
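A rough sketch of why the space must stay reserved: if a null decimal keeps its fixed-size slot, a later non-null value can be written in place without moving anything, which is what a mutable hash-aggregation buffer needs. `DecimalSlot` is a hypothetical stand-in for one field of an UnsafeRow, and the 16-byte size is an assumption (enough for a 38-digit unscaled value stored as a signed big-endian integer).

```python
class DecimalSlot:
    """Hypothetical model of one decimal field backed by a reserved region."""

    SIZE = 16  # bytes reserved for the unscaled value, even while null

    def __init__(self, buffer: bytearray, offset: int):
        self.buffer = buffer
        self.offset = offset  # fixed once; never cleared by a null update
        self.is_null = True

    def set(self, unscaled):
        if unscaled is None:
            # Mark null but keep the offset so the slot stays updatable
            # (the analogue of setDecimal(i, null, precision), not setNullAt(i)).
            self.is_null = True
            return
        end = self.offset + self.SIZE
        self.buffer[self.offset:end] = unscaled.to_bytes(self.SIZE, "big", signed=True)
        self.is_null = False

    def get(self):
        if self.is_null:
            return None
        end = self.offset + self.SIZE
        return int.from_bytes(self.buffer[self.offset:end], "big", signed=True)
```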

cc rxin

Author: Davies Liu <davies@databricks.com>

Closes #7978 from davies/update_decimal and squashes the following commits:

bed8100 [Davies Liu] isSettable -> isMutable

923c9eb [Davies Liu] address comments and fix bug

385891d [Davies Liu] Merge branch 'master' of github.com:apache/spark into update_decimal

36a1872 [Davies Liu] fix tests

cd6c524 [Davies Liu] support set decimal with precision > 18

---

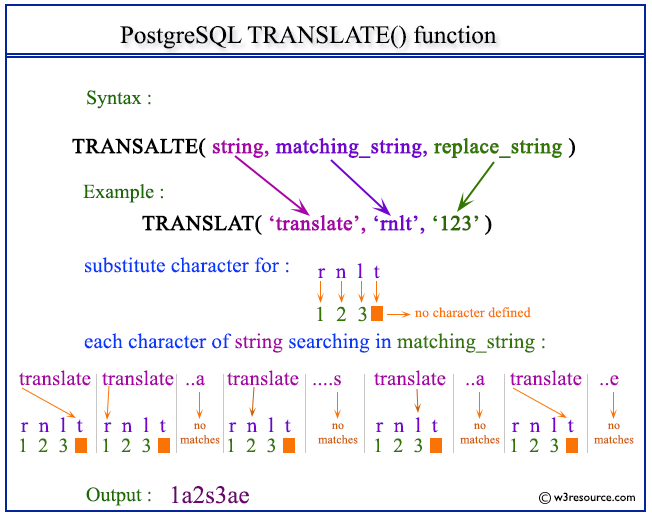

Author: zhichao.li <zhichao.li@intel.com>

Closes #7709 from zhichao-li/translate and squashes the following commits:

9418088 [zhichao.li] refine checking condition

f2ab77a [zhichao.li] clone string

9d88f2d [zhichao.li] fix indent

6aa2962 [zhichao.li] style

e575ead [zhichao.li] add python api

9d4bab0 [zhichao.li] add special case for fodable and refactor unittest

eda7ad6 [zhichao.li] update to use TernaryExpression

cdfd4be [zhichao.li] add function translate

---

This patch extends UnsafeExternalSorter to support records larger than the page size. The basic strategy is the same as in #7762: store large records in their own overflow pages.

Author: Josh Rosen <joshrosen@databricks.com>

Closes #7891 from JoshRosen/large-records-in-sql-sorter and squashes the following commits:

967580b [Josh Rosen] Merge remote-tracking branch 'origin/master' into large-records-in-sql-sorter

948c344 [Josh Rosen] Add large records tests for KV sorter.

3c17288 [Josh Rosen] Combine memory and disk cleanup into general cleanupResources() method

380f217 [Josh Rosen] Merge remote-tracking branch 'origin/master' into large-records-in-sql-sorter

27eafa0 [Josh Rosen] Fix page size in PackedRecordPointerSuite

a49baef [Josh Rosen] Address initial round of review comments

3edb931 [Josh Rosen] Remove accidentally-committed debug statements.

2b164e2 [Josh Rosen] Support large records in UnsafeExternalSorter.

---

This PR is based on #7186 (it only fixes the conflicts); thanks to tarekauel.

find_in_set(string str, string strList): int

Returns the position of the first occurrence of str in strList, where strList is a comma-delimited string. Returns null if either argument is null. Returns 0 if the first argument contains any commas. For example, find_in_set('ab', 'abc,b,ab,c,def') returns 3.

This is only added to SQL, not to the DataFrame API.
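The semantics described above, sketched in Python with `None` standing in for SQL null. The "not found" case is not stated in this message; the sketch assumes it returns 0, as Hive's `find_in_set` does.

```python
def find_in_set(s, str_list):
    """1-based position of s in the comma-delimited str_list."""
    if s is None or str_list is None:
        return None
    if "," in s:
        return 0  # a search string containing a comma can never match
    for position, item in enumerate(str_list.split(","), start=1):
        if item == s:
            return position
    return 0  # assumption: not found -> 0, as in Hive
```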

Closes #7186

Author: Tarek Auel <tarek.auel@googlemail.com>

Author: Davies Liu <davies@databricks.com>

Closes #7900 from davies/find_in_set and squashes the following commits:

4334209 [Davies Liu] Merge branch 'master' of github.com:apache/spark into find_in_set

8f00572 [Davies Liu] Merge branch 'master' of github.com:apache/spark into find_in_set

243ede4 [Tarek Auel] [SPARK-8244][SQL] hive compatibility

1aaf64e [Tarek Auel] [SPARK-8244][SQL] unit test fix

e4093a4 [Tarek Auel] [SPARK-8244][SQL] final modifier for COMMA_UTF8

0d05df5 [Tarek Auel] Merge branch 'master' into SPARK-8244

208d710 [Tarek Auel] [SPARK-8244] address comments & bug fix

71b2e69 [Tarek Auel] [SPARK-8244] find_in_set

66c7fda [Tarek Auel] Merge branch 'master' into SPARK-8244

61b8ca2 [Tarek Auel] [SPARK-8224] removed loop and split; use unsafe String comparison

4f75a65 [Tarek Auel] Merge branch 'master' into SPARK-8244

e3b20c8 [Tarek Auel] [SPARK-8244] added type check

1c2bbb7 [Tarek Auel] [SPARK-8244] findInSet

---

Previous code assumed little-endian.
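One way to make a string prefix independent of the platform's byte order is to decode the bytes explicitly as big-endian instead of reading a native `long` from memory. A minimal sketch, assuming the prefix is the first 8 bytes of the string, zero-padded when shorter, so that comparing prefixes as unsigned integers agrees with byte-wise comparison; `string_prefix` is a hypothetical name, not the UTF8String API.

```python
def string_prefix(data: bytes) -> int:
    # Take at most 8 bytes; positions past the end are treated as zero.
    head = data[:8].ljust(8, b"\x00")
    # Explicit big-endian decode: the first byte becomes the most significant,
    # so unsigned integer order matches lexicographic byte order.
    return int.from_bytes(head, "big", signed=False)
```

Because the decode order is fixed in code rather than inherited from the CPU, the same bytes produce the same prefix on little- and big-endian machines.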

Author: Matthew Brandyberry <mbrandy@us.ibm.com>

Closes #7902 from mtbrandy/SPARK-9483 and squashes the following commits:

ec31df8 [Matthew Brandyberry] [SPARK-9483] Changes from review comments.

17d54c6 [Matthew Brandyberry] [SPARK-9483] Fix UTF8String.getPrefix for big-endian.

---

This PR adds UnsafeArrayData, which is currently encoded as follows:

- the first 4 bytes are the number of elements;

- then, for each element, 4 bytes hold its start offset; a negative offset means the element is null;

- followed by the elements themselves.

For example, [10, 11, 12, 13, null, 14] is encoded as:

6, 28, 32, 36, 40, -44, 44, 10, 11, 12, 13, 14

Note that when we read an UnsafeArrayData from bytes, we can read the first 4 bytes as numElements and take the rest (skipping the first 4 bytes) as the value region.

Unsafe map data uses two unsafe arrays: the first 4 bytes are the number of elements, the second 4 bytes are the number of bytes in the key array, followed by the key array data and the value array data.
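The array layout can be reproduced with `struct`. This sketch handles only 4-byte ints, assumes little-endian byte order, and measures offsets from the start of the buffer (including the numElements field), which is what makes the first offset 28 for six elements; `encode_int_array` and `decode_int_array` are hypothetical names.

```python
import struct


def encode_int_array(values):
    """Encode a list of ints (None = null) as numElements, offsets, data."""
    n = len(values)
    cursor = 4 + 4 * n  # data region starts after numElements and the offsets
    offsets, data = [], []
    for v in values:
        if v is None:
            offsets.append(-cursor)  # negative offset marks a null element
        else:
            offsets.append(cursor)
            data.append(v)
            cursor += 4  # each int element occupies 4 bytes
    return struct.pack(f"<{1 + n + len(data)}i", n, *offsets, *data)


def decode_int_array(buf):
    (n,) = struct.unpack_from("<i", buf, 0)
    offsets = struct.unpack_from(f"<{n}i", buf, 4)
    return [None if off < 0 else struct.unpack_from("<i", buf, off)[0]
            for off in offsets]
```

Reading the encoded buffer back as 4-byte words gives exactly the sequence in the example above.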

Author: Wenchen Fan <cloud0fan@outlook.com>

Closes #7752 from cloud-fan/unsafe-array and squashes the following commits:

3269bd7 [Wenchen Fan] fix a bug

6445289 [Wenchen Fan] add unit tests

49adf26 [Wenchen Fan] add unsafe map

20d1039 [Wenchen Fan] add comments and unsafe converter

821b8db [Wenchen Fan] add unsafe array

---

The detailed approach is documented in UnsafeKVExternalSorterSuite.testKVSorter(), working as follows:

1. Create input by generating data randomly based on the given key/value schema (which is also randomly drawn from a list of candidate types)

2. Run UnsafeKVExternalSorter on the generated data

3. Collect the output from the sorter, and make sure the keys are sorted in ascending order

4. Sort the input by both key and value, and sort the sorter output also by both key and value. Compare the sorted input and sorted output together to make sure all the key/values match.

5. Check memory allocation to make sure there is no memory leak.

There is also a spill flag. When set to true, the sorter will spill probabilistically roughly every 100 records.
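The same test structure in miniature, with Python's `sorted` standing in for UnsafeKVExternalSorter (`sort_kv` is a hypothetical placeholder for the sorter under test). Key and value types are drawn from ints and short strings only, and neither the spill flag nor the memory-leak check in step 5 is modeled.

```python
import random


def sort_kv(records):
    """Placeholder for the sorter under test: orders records by key only."""
    return sorted(records, key=lambda kv: kv[0])


def random_value(kind, rng):
    if kind is int:
        return rng.randint(-100, 100)
    return "".join(rng.choice("abc") for _ in range(rng.randint(0, 3)))


def check_kv_sorter(sorter, seed, n=1000):
    rng = random.Random(seed)
    # 1. Draw a random key/value schema and generate matching random records.
    key_type, value_type = rng.choice([int, str]), rng.choice([int, str])
    records = [(random_value(key_type, rng), random_value(value_type, rng))
               for _ in range(n)]
    # 2. Run the sorter on the generated data.
    output = sorter(records)
    # 3. Keys in the output must be in ascending order.
    keys = [k for k, _ in output]
    assert all(a <= b for a, b in zip(keys, keys[1:])), "keys are not sorted"
    # 4. Sorting both sides by (key, value) must give identical lists.
    assert sorted(records) == sorted(output), "records were lost or altered"
    return True
```

Sorting both input and output by (key, value) in step 4 makes the comparison insensitive to how the sorter orders records with equal keys.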

Author: Reynold Xin <rxin@databricks.com>

Closes #7873 from rxin/kvsorter-randomized-test and squashes the following commits:

a08c251 [Reynold Xin] Resource cleanup.

0488b5c [Reynold Xin] [SPARK-9543][SQL] Add randomized testing for UnsafeKVExternalSorter.

---

UTF8String and struct

When accessing a column in UnsafeRow, it's good to avoid the copy; instead, we should do a deep copy when turning the UnsafeRow into a generic Row. This PR adds a generated FromUnsafeProjection to do that.

This PR also fixes the expressions that cache a UTF8String, which should also copy it.
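Why the copy matters, sketched with a reused buffer: a zero-copy view into the buffer (analogous to a UTF8String pointing into an UnsafeRow) changes when the next row overwrites the buffer, while a copied value does not. The variable names are illustrative, not Spark APIs.

```python
row_buffer = bytearray(b"alice")    # reused for every row, like an UnsafeRow's memory

view = memoryview(row_buffer)[0:5]  # zero-copy access to the "name" column
copied = bytes(view)                # deep copy, as done when building a generic Row

row_buffer[0:5] = b"bobby"          # the next row is written into the same buffer

# The view now sees the new row's data; the copy still holds the original value.
```

This is also why an expression that caches a string value must copy it: a cached view would silently change under it.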

Author: Davies Liu <davies@databricks.com>

Closes #7840 from davies/avoid_copy and squashes the following commits:

230c8a1 [Davies Liu] address comment

fd797c9 [Davies Liu] Merge branch 'master' of github.com:apache/spark into avoid_copy

e095dd0 [Davies Liu] rollback rename

8ef5b0b [Davies Liu] copy String in Columnar

81360b8 [Davies Liu] fix class name

9aecb88 [Davies Liu] use FromUnsafeProjection to do deep copy for UTF8String and struct

---

This PR is based on #7208; thanks to HuJiayin.

Closes #7208

Author: HuJiayin <jiayin.hu@intel.com>

Author: Davies Liu <davies@databricks.com>

Closes #7850 from davies/initcap and squashes the following commits:

54472e9 [Davies Liu] fix python test

17ffe51 [Davies Liu] Merge branch 'master' of github.com:apache/spark into initcap

ca46390 [Davies Liu] Merge branch 'master' of github.com:apache/spark into initcap

3a906e4 [Davies Liu] implement title case in UTF8String

8b2506a [HuJiayin] Update functions.py

2cd43e5 [HuJiayin] fix python style check

b616c0e [HuJiayin] add python api

1f5a0ef [HuJiayin] add codegen

7e0c604 [HuJiayin] Merge branch 'master' of https://github.com/apache/spark into initcap

6a0b958 [HuJiayin] add column

c79482d [HuJiayin] support soundex

7ce416b [HuJiayin] support initcap rebase code

---

This pull request adds a sortedIterator method to UnsafeFixedWidthAggregationMap that sorts its data in-place by the grouping key.

This is needed so we can fall back to external sorting for aggregation.

Author: Reynold Xin <rxin@databricks.com>

Closes #7849 from rxin/bytes2bytes-sorting and squashes the following commits:

75018c6 [Reynold Xin] Updated documentation.

81a8694 [Reynold Xin] [SPARK-9520][SQL] Support in-place sort in UnsafeFixedWidthAggregationMap.

---

This is based on #7641, thanks to zhichao-li

Closes #7641

Author: zhichao.li <zhichao.li@intel.com>

Author: Davies Liu <davies@databricks.com>

Closes #7848 from davies/substr and squashes the following commits:

461b709 [Davies Liu] remove bytearry from tests

b45377a [Davies Liu] Merge branch 'master' of github.com:apache/spark into substr

01d795e [zhichao.li] scala style

99aa130 [zhichao.li] add substring to dataframe

4f68bfe [zhichao.li] add binary type support for substring

---

UnsafeExternalSorter

BytesToBytesMap currently encodes key/value data in the following format:

```
8B key length, key data, 8B value length, value data
```

UnsafeExternalSorter, on the other hand, encodes data this way:

```
4B record length, data
```

As a result, we cannot pass records encoded by BytesToBytesMap directly into UnsafeExternalSorter for sorting. However, if we rearrange data slightly, we can then pass the key/value records directly into UnsafeExternalSorter:

```
4B key+value length, 4B key length, key data, value data
```
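A sketch of encoding and decoding the rearranged record layout. It assumes little-endian 4-byte lengths and takes the first field literally as key length plus value length, as written; whether the real encoding also counts the 4-byte key-length field is not stated here. `encode_kv` and `decode_kv` are hypothetical names.

```python
import struct


def encode_kv(key: bytes, value: bytes) -> bytes:
    # 4B key+value length, 4B key length, key data, value data
    return struct.pack("<ii", len(key) + len(value), len(key)) + key + value


def decode_kv(buf: bytes, pos: int = 0):
    """Return (key, value, next_pos) for the record starting at pos."""
    total, key_len = struct.unpack_from("<ii", buf, pos)
    start = pos + 8
    key = buf[start:start + key_len]
    value = buf[start + key_len:start + total]  # value length = total - key_len
    return key, value, start + total
```

Records laid out this way can be walked back to back, which is the property a generic length-prefixed sorter relies on.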

Author: Reynold Xin <rxin@databricks.com>

Closes #7845 from rxin/kvsort-rebase and squashes the following commits:

5716b59 [Reynold Xin] Fixed test.

2e62ccb [Reynold Xin] Updated BytesToBytesMap's data encoding to put the key first.

a51b641 [Reynold Xin] Added a KV sorter interface.

---

This PR is based on the original work by JoshRosen in #7780, which adds ScalaCheck property-based tests for UTF8String.

Author: Josh Rosen <joshrosen@databricks.com>

Author: Yijie Shen <henry.yijieshen@gmail.com>

Closes #7830 from yjshen/utf8-property-checks and squashes the following commits:

593da3a [Yijie Shen] resolve comments

c0800e6 [Yijie Shen] Finish all todos in suite

52f51a0 [Josh Rosen] Add some more failing tests

49ed0697 [Josh Rosen] Rename suite

9209c64 [Josh Rosen] UTF8String Property Checks.

---

This PR is based on #7533; thanks to zhichao-li.

Closes #7533

Author: zhichao.li <zhichao.li@intel.com>

Author: Davies Liu <davies@databricks.com>

Closes #7843 from davies/str_index and squashes the following commits:

391347b [Davies Liu] add python api

3ce7802 [Davies Liu] fix substringIndex

f2d29a1 [Davies Liu] Merge branch 'master' of github.com:apache/spark into str_index

515519b [zhichao.li] add foldable and remove null checking

9546991 [zhichao.li] scala style

67c253a [zhichao.li] hide some apis and clean code

b19b013 [zhichao.li] add codegen and clean code

ac863e9 [zhichao.li] reduce the calling of numChars

12e108f [zhichao.li] refine unittest

d92951b [zhichao.li] add lastIndexOf

52d7b03 [zhichao.li] add substring_index function

---

BytesToBytesMap & integrate with ShuffleMemoryManager

This patch adds support for entries larger than the default page size in BytesToBytesMap. These large rows are handled by allocating special overflow pages to hold individual entries.

In addition, this patch integrates BytesToBytesMap with the ShuffleMemoryManager:

- Move BytesToBytesMap from `unsafe` to `core` so that it can import `ShuffleMemoryManager`.

- Before allocating new data pages, ask the ShuffleMemoryManager to reserve the memory:

  - `putNewKey()` now returns a boolean to indicate whether the insert succeeded or failed due to a lack of memory. The caller can use this value to respond to the memory pressure (e.g. by spilling).

  - `UnsafeFixedWidthAggregationMap.getAggregationBuffer()` now returns `null` to signal failure due to a lack of memory.

- Updated all uses of these classes to handle these error conditions.

- Added new tests for allocating large records and for allocations which fail due to memory pressure.

- Extended the `afterAll()` test teardown methods to detect ShuffleMemoryManager leaks.
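A small model of the two ideas in this patch: records larger than a page get their own overflow page, and an insert reports failure instead of over-allocating when the memory manager refuses a reservation. `MemoryBudget` and `BoundedMap` are hypothetical stand-ins for ShuffleMemoryManager and BytesToBytesMap, and the 64-byte page size is arbitrary.

```python
class MemoryBudget:
    """Hypothetical stand-in for ShuffleMemoryManager: a fixed byte budget."""

    def __init__(self, limit: int):
        self.limit, self.used = limit, 0

    def try_acquire(self, nbytes: int) -> bool:
        if self.used + nbytes > self.limit:
            return False
        self.used += nbytes
        return True

    def release(self, nbytes: int) -> None:
        self.used -= nbytes


class BoundedMap:
    PAGE_SIZE = 64  # arbitrary page size for the sketch

    def __init__(self, memory: MemoryBudget):
        self.memory = memory
        self.entries = {}
        self.free_in_page = 0  # bytes left in the current data page
        self.reserved = 0      # total bytes acquired from the budget

    def put_new_key(self, key: bytes, value: bytes) -> bool:
        size = len(key) + len(value)
        if size > self.PAGE_SIZE:
            # Large record: a dedicated overflow page sized to fit it exactly.
            if not self.memory.try_acquire(size):
                return False  # caller should respond, e.g. by spilling
            self.reserved += size
        else:
            if size > self.free_in_page:
                if not self.memory.try_acquire(self.PAGE_SIZE):
                    return False
                self.reserved += self.PAGE_SIZE
                self.free_in_page = self.PAGE_SIZE
            self.free_in_page -= size
        self.entries[key] = value
        return True

    def free(self) -> None:
        self.memory.release(self.reserved)
        self.entries.clear()
        self.reserved = self.free_in_page = 0
```

Returning a boolean keeps the policy decision (spill, retry, fail) with the caller rather than inside the map.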

Author: Josh Rosen <joshrosen@databricks.com>

Closes #7762 from JoshRosen/large-rows and squashes the following commits:

ae7bc56 [Josh Rosen] Fix compilation

82fc657 [Josh Rosen] Merge remote-tracking branch 'origin/master' into large-rows

34ab943 [Josh Rosen] Remove semi

31a525a [Josh Rosen] Integrate BytesToBytesMap with ShuffleMemoryManager.

626b33c [Josh Rosen] Move code to sql/core and spark/core packages so that ShuffleMemoryManager can be integrated

ec4484c [Josh Rosen] Move BytesToBytesMap from unsafe package to core.

642ed69 [Josh Rosen] Rename size to numElements

bea1152 [Josh Rosen] Add basic test.

2cd3570 [Josh Rosen] Remove accidental duplicated code

07ff9ef [Josh Rosen] Basic support for large rows in BytesToBytesMap.

---

This PR brings SQL function soundex(), see https://issues.apache.org/jira/browse/HIVE-9738

It's based on #7115; thanks to HuJiayin.
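For reference, the classic American Soundex algorithm looks roughly like this. It is a generic sketch restricted to ASCII letters; Spark's and Hive's implementations may treat edge cases (non-letters, a non-letter first character, H/W handling) differently.

```python
# Letter -> digit code of classic American Soundex.
_CODES = {
    ch: digit
    for letters, digit in (("BFPV", "1"), ("CGJKQSXZ", "2"), ("DT", "3"),
                           ("L", "4"), ("MN", "5"), ("R", "6"))
    for ch in letters
}


def soundex(word: str) -> str:
    letters = [c for c in word.upper() if "A" <= c <= "Z"]
    if not letters:
        return ""
    result = letters[0]                  # keep the first letter as-is
    last = _CODES.get(letters[0], "")
    for c in letters[1:]:
        code = _CODES.get(c, "")
        if code:
            if code != last:             # adjacent letters with the same code collapse
                result += code
                if len(result) == 4:
                    break
            last = code
        elif c not in "HW":
            last = ""                    # vowels (and Y) separate repeated codes; H and W do not
    return result.ljust(4, "0")          # pad short codes with zeros
```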

Author: HuJiayin <jiayin.hu@intel.com>

Author: Davies Liu <davies@databricks.com>

Closes #7812 from davies/soundex and squashes the following commits:

fa75941 [Davies Liu] Merge branch 'master' of github.com:apache/spark into soundex

a4bd6d8 [Davies Liu] fix soundex

2538908 [HuJiayin] add codegen soundex

d15d329 [HuJiayin] add back ut

ded1a14 [HuJiayin] Merge branch 'master' of https://github.com/apache/spark

e2dec2c [HuJiayin] support soundex rebase code

---

Previously, we could get garbage data if the number of bytes was 0, on JVMs that are 4-byte aligned, or when compressed oops were enabled.

Author: Reynold Xin <rxin@databricks.com>

Closes #7789 from rxin/utf8string and squashes the following commits:

86ffa3e [Reynold Xin] Mask out data outside of valid range.

4d647ed [Reynold Xin] Mask out data.

c6e8794 [Reynold Xin] [SPARK-9460] Fix prefix generation for UTF8String.

---

As of today, StringPrefixComparator converts the long values back to byte arrays in order to compare them. This patch optimizes this to compare the longs directly, rather than turning the longs into byte arrays and comparing them byte by byte (unsigned).

This only works on little-endian architecture right now.
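On the JVM a 64-bit prefix lives in a signed `long`, so comparing two prefixes as unsigned values needs care. The sketch below emulates that: prefixes are stored as signed 64-bit integers and compared unsigned with the `Long.compare(a + Long.MIN_VALUE, b + Long.MIN_VALUE)` trick, including the 64-bit wrap-around. It decodes bytes big-endian for clarity, so it does not share the little-endian restriction; `to_signed64`, `prefix_of`, and `compare_unsigned` are hypothetical helper names.

```python
MASK = (1 << 64) - 1
LONG_MIN = -(1 << 63)


def to_signed64(u: int) -> int:
    """Reinterpret an unsigned 64-bit value as a JVM-style signed long."""
    return u - (1 << 64) if u >= (1 << 63) else u


def prefix_of(data: bytes) -> int:
    # First 8 bytes, zero-padded, read big-endian, stored as a signed long.
    return to_signed64(int.from_bytes(data[:8].ljust(8, b"\x00"), "big"))


def compare_unsigned(a: int, b: int) -> int:
    # Long.compare(a + Long.MIN_VALUE, b + Long.MIN_VALUE), with 64-bit wrap-around.
    x = to_signed64((a + LONG_MIN) & MASK)
    y = to_signed64((b + LONG_MIN) & MASK)
    return (x > y) - (x < y)
```

A plain signed comparison would put a string starting with byte 0xFF before one starting with "a", because the former's prefix is negative; the unsigned comparison restores byte order without allocating any arrays.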

Author: Reynold Xin <rxin@databricks.com>

Closes #7765 from rxin/SPARK-9460 and squashes the following commits:

e4908cc [Reynold Xin] Stricter randomized tests.

4c8d094 [Reynold Xin] [SPARK-9460] Avoid byte array allocation in StringPrefixComparator.

---

We need to make page sizes configurable so we can reduce them in unit tests and increase them in real production workloads. These sizes are now controlled by a new configuration, `spark.buffer.pageSize`. The new default is 64 megabytes.

Author: Josh Rosen <joshrosen@databricks.com>

Closes #7741 from JoshRosen/SPARK-9411 and squashes the following commits:

a43c4db [Josh Rosen] Fix pow

2c0eefc [Josh Rosen] Fix MAXIMUM_PAGE_SIZE_BYTES comment + value

bccfb51 [Josh Rosen] Lower page size to 4MB in TestHive

ba54d4b [Josh Rosen] Make UnsafeExternalSorter's page size configurable

0045aa2 [Josh Rosen] Make UnsafeShuffle's page size configurable

bc734f0 [Josh Rosen] Rename configuration

e614858 [Josh Rosen] Makes BytesToBytesMap page size configurable

---

We want to introduce a new IntervalType in 1.6 that is based only on the number of microseconds, so that intervals can be compared.

This renames the existing IntervalType to CalendarIntervalType so we can do that in the future.

Author: Reynold Xin <rxin@databricks.com>

Closes #7745 from rxin/calendarintervaltype and squashes the following commits:

99f64e8 [Reynold Xin] One more line ...

13466c8 [Reynold Xin] Fixed tests.

e20f24e [Reynold Xin] [SPARK-9430][SQL] Rename IntervalType to CalendarIntervalType.

---

This actually addresses 3 minor issues:

1) Enable the unit test (codegen) for mutable expressions (FormatNumber, Regexp_Replace/Regexp_Extract)

2) Use `PlatformDependent.copyMemory` instead of `System.arraycopy`

Author: Cheng Hao <hao.cheng@intel.com>

Closes #7566 from chenghao-intel/codegen_ut and squashes the following commits:

24f43ea [Cheng Hao] enable codegen for mutable expression & UTF8String performance

---

The two are redundant.

Once this patch is merged, I plan to remove the inbound conversions from unsafe aggregates.

Author: Reynold Xin <rxin@databricks.com>

Closes #7658 from rxin/unsafeconverters and squashes the following commits:

ed19e6c [Reynold Xin] Updated support types.

2a56d7e [Reynold Xin] [SPARK-9334][SQL] Remove UnsafeRowConverter in favor of UnsafeProjection.

---

This is only a trial and I'm not sure I understand it correctly, but I believe only 2 entries in `bytesOfCodePointInUTF8` are needed for the 6-byte code point case (lead byte 1111110x).

Details can be found in the "Description" section of https://en.wikipedia.org/wiki/UTF-8.
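A table of sequence lengths indexed by lead byte can be derived by counting leading one bits. Under the original UTF-8 design, which allowed sequences of up to 6 bytes (RFC 3629 later limited UTF-8 to 4), only the two lead bytes 0xFC and 0xFD match `1111110x`. The helper names below are hypothetical.

```python
def leading_ones(byte: int) -> int:
    """Count the 1 bits at the top of an 8-bit value."""
    count = 0
    mask = 0x80
    while mask and byte & mask:
        count += 1
        mask >>= 1
    return count


def bytes_of_code_point(lead: int) -> int:
    """Sequence length implied by a lead byte in the original (up to 6-byte) UTF-8."""
    n = leading_ones(lead)
    if n == 0:
        return 1      # 0xxxxxxx: ASCII
    if 2 <= n <= 6:
        return n      # 110xxxxx .. 1111110x
    # 10xxxxxx is a continuation byte; 0xFE and 0xFF never start a sequence.
    raise ValueError(f"0x{lead:02X} is not a valid lead byte")
```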

Author: zhichao.li <zhichao.li@intel.com>

Closes #7582 from zhichao-li/utf8 and squashes the following commits:

8bddd01 [zhichao.li] two extra entries

---

This PR introduces unsafe versions (using UnsafeRow) of HashJoin, HashOuterJoin and HashSemiJoin, covering both the broadcast and shuffle variants (except FullOuterJoin, which is better implemented using SortMergeJoin).

For now it uses a HashMap to store UnsafeRow; this will change to BytesToBytesMap for better performance (in another PR).
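The build-and-probe idea behind these joins, sketched over plain Python tuples rather than UnsafeRow (the `dict` plays the role of the HashMap). Inner, left outer, and left semi variants are shown; full outer join is left out, as in the PR, and SQL null-key semantics are ignored. The function names are hypothetical.

```python
from collections import defaultdict


def build_table(rows, key):
    """Build side: map each join key to every row that has it."""
    table = defaultdict(list)
    for row in rows:
        table[key(row)].append(row)
    return table


def inner_join(stream, build_rows, stream_key, build_key):
    table = build_table(build_rows, build_key)
    # Probe side: look up each streamed row's key and emit one pair per match.
    return [(s, b) for s in stream for b in table.get(stream_key(s), [])]


def left_outer_join(stream, build_rows, stream_key, build_key):
    table = build_table(build_rows, build_key)
    out = []
    for s in stream:
        matches = table.get(stream_key(s), [])
        if matches:
            out.extend((s, b) for b in matches)
        else:
            out.append((s, None))  # unmatched streamed row padded with null
    return out


def left_semi_join(stream, build_rows, stream_key, build_key):
    keys = {build_key(b) for b in build_rows}  # only key existence matters
    return [s for s in stream if stream_key(s) in keys]
```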

Author: Davies Liu <davies@databricks.com>

Closes #7480 from davies/unsafe_join and squashes the following commits:

6294b1e [Davies Liu] fix projection

10583f1 [Davies Liu] Merge branch 'master' of github.com:apache/spark into unsafe_join

dede020 [Davies Liu] fix test

84c9807 [Davies Liu] address comments

a05b4f6 [Davies Liu] support UnsafeRow in LeftSemiJoinBNL and BroadcastNestedLoopJoin

611d2ed [Davies Liu] Merge branch 'master' of github.com:apache/spark into unsafe_join

9481ae8 [Davies Liu] return UnsafeRow after join()

ca2b40f [Davies Liu] revert unrelated change

68f5cd9 [Davies Liu] Merge branch 'master' of github.com:apache/spark into unsafe_join

0f4380d [Davies Liu] ada a comment

69e38f5 [Davies Liu] Merge branch 'master' of github.com:apache/spark into unsafe_join

1a40f02 [Davies Liu] refactor

ab1690f [Davies Liu] address comments

60371f2 [Davies Liu] use UnsafeRow in SemiJoin

a6c0b7d [Davies Liu] Merge branch 'master' of github.com:apache/spark into unsafe_join

184b852 [Davies Liu] fix style

6acbb11 [Davies Liu] fix tests

95d0762 [Davies Liu] remove println

bea4a50 [Davies Liu] Unsafe HashJoin

---

https://issues.apache.org/jira/browse/SPARK-9157

Author: Tarek Auel <tarek.auel@googlemail.com>

Closes #7534 from tarekauel/SPARK-9157 and squashes the following commits:

e65e3e9 [Tarek Auel] [SPARK-9157] indent fix

44e89f8 [Tarek Auel] [SPARK-9157] use EMPTY_UTF8

37d54c4 [Tarek Auel] Merge branch 'master' into SPARK-9157

60732ea [Tarek Auel] [SPARK-9157] created substringSQL in UTF8String

18c3576 [Tarek Auel] [SPARK-9157][SQL] remove slice pos

1a2e611 [Tarek Auel] [SPARK-9157][SQL] codegen substring

---

Jira: https://issues.apache.org/jira/browse/SPARK-9156

Author: Tarek Auel <tarek.auel@googlemail.com>

Closes #7547 from tarekauel/SPARK-9156 and squashes the following commits:

0be2700 [Tarek Auel] [SPARK-9156][SQL] indention fix

b860eaf [Tarek Auel] [SPARK-9156][SQL] codegen StringSplit

5ad6a1f [Tarek Auel] [SPARK-9156] codegen StringSplit

---

Jira: https://issues.apache.org/jira/browse/SPARK-9178

In order to avoid calls to `UTF8String.fromString("")`, this PR adds an `EMPTY_STRING` constant to `UTF8String`. A `UTF8String` is immutable, so we can use a constant, can't we?

I searched for current usage of `UTF8String.fromString("")` with

`grep -R "UTF8String.fromString(\"\")" .`

Author: Tarek Auel <tarek.auel@googlemail.com>

Closes #7509 from tarekauel/SPARK-9178 and squashes the following commits:

8d6c405 [Tarek Auel] [SPARK-9178] revert intellij indents

3627b80 [Tarek Auel] [SPARK-9178] revert concat tests changes

3f5fbf5 [Tarek Auel] [SPARK-9178] rebase and add final to UTF8String.EMPTY_UTF8

47cda68 [Tarek Auel] Merge branch 'master' into SPARK-9178

4a37344 [Tarek Auel] [SPARK-9178] changed name to EMPTY_UTF8, added tests

748b87a [Tarek Auel] [SPARK-9178] Add empty string constant to UTF8String

---

Jira https://issues.apache.org/jira/browse/SPARK-9155

Author: Tarek Auel <tarek.auel@googlemail.com>

Closes #7531 from tarekauel/SPARK-9155 and squashes the following commits:

423c426 [Tarek Auel] [SPARK-9155] language typo fix

e34bd1b [Tarek Auel] [SPARK-9155] moved creation of blank string to UTF8String

4bc33e6 [Tarek Auel] [SPARK-9155] codegen StringSpace

---

I also changed the semantics of concat w.r.t. null back to the same behavior as Hive.

That is to say, concat now returns null if any input is null.
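A sketch of the null handling, with `None` standing in for SQL null: `concat` returns null as soon as any input is null, as stated above. For `concat_ws`, the sketch assumes the Hive-style behavior of skipping null arguments and returning null only when the separator is null; this message does not spell that out.

```python
def concat(*parts):
    # Hive semantics: any null input makes the whole result null.
    if any(p is None for p in parts):
        return None
    return "".join(parts)


def concat_ws(sep, *parts):
    if sep is None:
        return None
    # Assumption: null arguments are skipped rather than nulling the result.
    return sep.join(p for p in parts if p is not None)
```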

Author: Reynold Xin <rxin@databricks.com>

Closes #7504 from rxin/concat_ws and squashes the following commits:

83fd950 [Reynold Xin] Fixed type casting.

3ae85f7 [Reynold Xin] Write null better.

cdc7be6 [Reynold Xin] Added code generation for pure string mode.

a61c4e4 [Reynold Xin] Updated comments.

2d51406 [Reynold Xin] [SPARK-8241][SQL] string function: concat_ws.

---

Author: Reynold Xin <rxin@databricks.com>

Closes #7486 from rxin/concat and squashes the following commits:

5217d6e [Reynold Xin] Removed Hive's concat test.

f5cb7a3 [Reynold Xin] Concat is never nullable.

ae4e61f [Reynold Xin] Removed extra import.

fddcbbd [Reynold Xin] Fixed NPE.

22e831c [Reynold Xin] Added missing file.

57a2352 [Reynold Xin] [SPARK-8240][SQL] string function: concat

---

JIRA: https://issues.apache.org/jira/browse/SPARK-8945

Add add and subtract expressions for IntervalType.
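Assuming an interval is represented as a months count plus a microseconds count, add and subtract can work component-wise, since the two parts are never converted into each other. The Python class below is a hypothetical stand-in named after the `CalendarInterval` type that appears elsewhere in this log.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CalendarInterval:
    months: int
    microseconds: int

    def add(self, other: "CalendarInterval") -> "CalendarInterval":
        # Months and microseconds are independent components.
        return CalendarInterval(self.months + other.months,
                                self.microseconds + other.microseconds)

    def subtract(self, other: "CalendarInterval") -> "CalendarInterval":
        return CalendarInterval(self.months - other.months,
                                self.microseconds - other.microseconds)
```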

Author: Liang-Chi Hsieh <viirya@appier.com>

This patch had conflicts when merged, resolved by

Committer: Reynold Xin <rxin@databricks.com>

Closes #7398 from viirya/interval_add_subtract and squashes the following commits:

acd1f1e [Liang-Chi Hsieh] Merge remote-tracking branch 'upstream/master' into interval_add_subtract

5abae28 [Liang-Chi Hsieh] For comments.

6f5b72e [Liang-Chi Hsieh] Merge remote-tracking branch 'upstream/master' into interval_add_subtract

dbe3906 [Liang-Chi Hsieh] For comments.

13a2fc5 [Liang-Chi Hsieh] Merge remote-tracking branch 'upstream/master' into interval_add_subtract

83ec129 [Liang-Chi Hsieh] Remove intervalMethod.

acfe1ab [Liang-Chi Hsieh] Fix scala style.

d3e9d0e [Liang-Chi Hsieh] Add add and subtract expressions for IntervalType.

---

This is a minor fix for #7355 to allow leading and trailing spaces in strings parsed to `Interval`.

Author: Liang-Chi Hsieh <viirya@appier.com>

Closes #7390 from viirya/fix_interval_string and squashes the following commits:

9eb6831 [Liang-Chi Hsieh] Use trim instead of modifying regex.

57861f7 [Liang-Chi Hsieh] Fix scala style.

815a9cb [Liang-Chi Hsieh] Slightly modify regex to allow spaces in the beginning and ending of string.

---

These are minor corrections to the documentation of several classes; the javadoc errors were preventing the following from completing:

```bash
build/sbt publish-local
```

I believe this may be specific to running JDK 8, as ankurdave does not appear to have this issue on JDK 7.

Author: Joseph Gonzalez <joseph.e.gonzalez@gmail.com>

Closes #7354 from jegonzal/FixingJavadocErrors and squashes the following commits:

6664b7e [Joseph Gonzalez] making requested changes

2e16d89 [Joseph Gonzalez] Fixing errors in javadocs that prevents build/sbt publish-local from completing.

---

Author: Wenchen Fan <cloud0fan@outlook.com>

Closes #7355 from cloud-fan/fromString and squashes the following commits:

3bbb9d6 [Wenchen Fan] fix code gen

7dab957 [Wenchen Fan] naming fix

0fbbe19 [Wenchen Fan] address comments

ac1f3d1 [Wenchen Fan] Support casting between IntervalType and StringType

---

[SPARK-8258] [SPARK-8259] [SPARK-8261] [SPARK-8262] [SPARK-8253] [SPARK-8260] [SPARK-8267] [SQL] Add String Expressions

Author: Cheng Hao <hao.cheng@intel.com>

Closes #6762 from chenghao-intel/str_funcs and squashes the following commits:

b09a909 [Cheng Hao] update the code as feedback

7ebbf4c [Cheng Hao] Add more string expressions

---

Author: Wenchen Fan <cloud0fan@outlook.com>

Closes #7315 from cloud-fan/toString and squashes the following commits:

4fc8d80 [Wenchen Fan] Implement toString for Interval data type

---

Jira: https://issues.apache.org/jira/browse/SPARK-8830

rxin and HuJiayin, can you have a look at it?

Author: Tarek Auel <tarek.auel@googlemail.com>

Closes #7236 from tarekauel/native-levenshtein-distance and squashes the following commits:

ee4c4de [Tarek Auel] [SPARK-8830] implemented improvement proposals

c252e71 [Tarek Auel] [SPARK-8830] removed chartAt; use unsafe method for byte array comparison

ddf2222 [Tarek Auel] Merge branch 'master' into native-levenshtein-distance

179920a [Tarek Auel] [SPARK-8830] added description

5e9ed54 [Tarek Auel] [SPARK-8830] removed StringUtils import

dce4308 [Tarek Auel] [SPARK-8830] native levenshtein distance

---

We need a new data type to represent time intervals. Because the number of days in a month is not fixed, an interval needs 2 values: an int `months` and a long `microseconds`.

The interval literal syntax looks like:

`interval 3 years -4 month 4 weeks 3 second`

Because we use the number of 100ns units as the value of `TimestampType`, it may not make sense to support a nanosecond unit.
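A simplified parser for the literal syntax above, returning `(months, microseconds)`: years fold into months, and weeks and smaller units fold into microseconds. The accepted unit names, plural handling, and whitespace trimming are assumptions for illustration; the real grammar (for example, whether units must appear in a fixed order) may differ.

```python
MONTHS_PER = {"year": 12, "month": 1}
MICROS_PER = {
    "week": 7 * 24 * 3600 * 10**6,
    "day": 24 * 3600 * 10**6,
    "hour": 3600 * 10**6,
    "minute": 60 * 10**6,
    "second": 10**6,
    "millisecond": 1000,
    "microsecond": 1,
}


def parse_interval(text: str):
    tokens = text.strip().lower().split()  # tolerate surrounding spaces
    if len(tokens) < 3 or tokens[0] != "interval" or len(tokens) % 2 != 1:
        raise ValueError(f"invalid interval: {text!r}")
    months = micros = 0
    for number, unit in zip(tokens[1::2], tokens[2::2]):
        value = int(number)  # signed, e.g. "-4"
        unit = unit[:-1] if unit.endswith("s") else unit  # accept plural units
        if unit in MONTHS_PER:
            months += value * MONTHS_PER[unit]
        elif unit in MICROS_PER:
            micros += value * MICROS_PER[unit]
        else:
            raise ValueError(f"unknown unit: {unit!r}")
    return months, micros
```

For `interval 3 years -4 month 4 weeks 3 second`, this gives 32 months and 2,419,203,000,000 microseconds (4 weeks plus 3 seconds).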

Author: Wenchen Fan <cloud0fan@outlook.com>

Closes #7226 from cloud-fan/interval and squashes the following commits:

632062d [Wenchen Fan] address comments

ac348c3 [Wenchen Fan] use case class

0342d2e [Wenchen Fan] use array byte

df9256c [Wenchen Fan] fix style

fd6f18a [Wenchen Fan] address comments

1856af3 [Wenchen Fan] support interval type

---

Let UTF8String work with a binary buffer. Until we have a better idea of how to manage the lifecycle of UTF8String in Row, we still copy when calling `UnsafeRow.get()` for StringType.

cc rxin JoshRosen

Author: Davies Liu <davies@databricks.com>

Closes #7197 from davies/unsafe_string and squashes the following commits:

51b0ea0 [Davies Liu] fix test

50c1ebf [Davies Liu] remove optimization for upper/lower case

315d491 [Davies Liu] Merge branch 'master' of github.com:apache/spark into unsafe_string

93fce17 [Davies Liu] address comment

e9ff7ba [Davies Liu] clean up

67ec266 [Davies Liu] fix bug

7b74b1f [Davies Liu] fallback to String if local dependent

ab7857c [Davies Liu] address comments

7da92f5 [Davies Liu] handle local in toUpperCase/toLowerCase

59dbb23 [Davies Liu] revert python change

d1e0716 [Davies Liu] Merge branch 'master' of github.com:apache/spark into unsafe_string

002e35f [Davies Liu] rollback hashCode change

a87b7a8 [Davies Liu] improve toLowerCase and toUpperCase

76e794a [Davies Liu] fix test

8b2d5ce [Davies Liu] fix tests

fd3f0a6 [Davies Liu] bug fix

c4e9c88 [Davies Liu] Merge branch 'master' of github.com:apache/spark into unsafe_string

c45d921 [Davies Liu] address comments

175405f [Davies Liu] unsafe UTF8String
