
| author | zhichao.li <zhichao.li@intel.com> | 2015-08-06 09:02:30 -0700 |
|---|---|---|
| committer | Davies Liu <davies.liu@gmail.com> | 2015-08-06 09:02:30 -0700 |
| commit | aead18ffca36830e854fba32a1cac11a0b2e31d5 | |
| tree | 172fe1d83e6c691abad40c4114662db84fe8fbf2 /python/pyspark/sql/functions.py | |
| parent | d5a9af3230925c347d0904fe7f2402e468e80bc8 | |

[SPARK-8266] [SQL] add function translate

Author: zhichao.li <zhichao.li@intel.com>

Closes #7709 from zhichao-li/translate and squashes the following commits:

9418088 [zhichao.li] refine checking condition

f2ab77a [zhichao.li] clone string

9d88f2d [zhichao.li] fix indent

6aa2962 [zhichao.li] style

e575ead [zhichao.li] add python api

9d4bab0 [zhichao.li] add special case for foldable and refactor unittest

eda7ad6 [zhichao.li] update to use TernaryExpression

cdfd4be [zhichao.li] add function translate

Diffstat (limited to 'python/pyspark/sql/functions.py')

| mode | file | lines changed |
|---|---|---|
| -rw-r--r-- | python/pyspark/sql/functions.py | 16 |

1 file changed, 16 insertions, 0 deletions

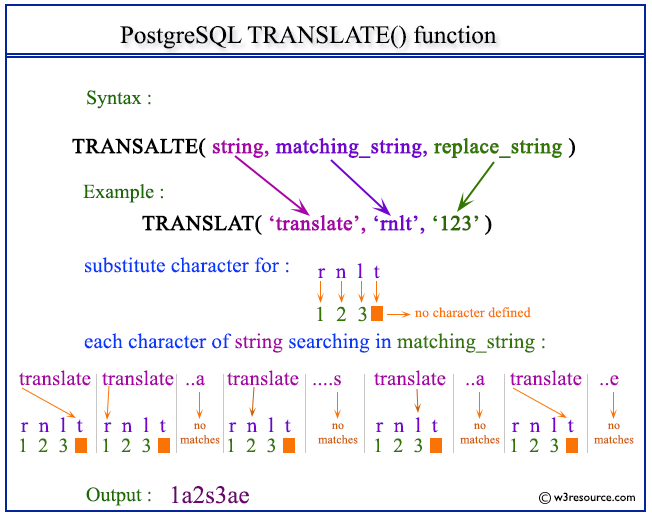

```diff
diff --git a/python/pyspark/sql/functions.py b/python/pyspark/sql/functions.py
index 9f0d71d796..b5c6a01f18 100644
--- a/python/pyspark/sql/functions.py
+++ b/python/pyspark/sql/functions.py
@@ -1290,6 +1290,22 @@ def length(col):
     return Column(sc._jvm.functions.length(_to_java_column(col)))
 
 
+@ignore_unicode_prefix
+@since(1.5)
+def translate(srcCol, matching, replace):
+    """A function translate any character in the `srcCol` by a character in `matching`.
+    The characters in `replace` is corresponding to the characters in `matching`.
+    The translate will happen when any character in the string matching with the character
+    in the `matching`.
+
+    >>> sqlContext.createDataFrame([('translate',)], ['a']).select(translate('a', "rnlt", "123")\
+    .alias('r')).collect()
+    [Row(r=u'1a2s3ae')]
+    """
+    sc = SparkContext._active_spark_context
+    return Column(sc._jvm.functions.translate(_to_java_column(srcCol), matching, replace))
+
+
 # ---------------------- Collection functions ------------------------------
 
 @since(1.4)
```
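The Python wrapper in this patch only forwards its arguments to the JVM `functions.translate`, so the character-mapping rule is easiest to see in a small model. The sketch below is a hypothetical pure-Python reimplementation (`translate_chars` is not part of PySpark), not Spark's code. It assumes that each character of `matching` maps to the character at the same position in `replace`, that characters of `matching` with no counterpart in `replace` are deleted (which is what the doctest output `1a2s3ae` implies for `t`), and that a character repeated in `matching` keeps its first mapping. It also works on Python code points, while the JVM side works on Java `char`s, so results may differ for characters outside the Basic Multilingual Plane.

```python
from typing import Dict, Optional


def translate_chars(src: str, matching: str, replace: str) -> str:
    """Hypothetical model of the translate(srcCol, matching, replace) mapping."""
    # Build an ordinal -> replacement table in the form str.translate expects.
    table: Dict[int, Optional[str]] = {}
    for i, ch in enumerate(matching):
        # Assumption: a repeated character in `matching` keeps its first mapping.
        if ord(ch) in table:
            continue
        # Characters of `matching` with no counterpart in `replace` map to None,
        # which str.translate treats as "delete this character".
        table[ord(ch)] = replace[i] if i < len(replace) else None
    # Characters absent from the table pass through unchanged; extra
    # characters in `replace` beyond len(matching) are never used.
    return src.translate(table)


# Same inputs as the doctest: r->1, n->2, l->3, and t has no partner, so it is dropped.
print(translate_chars("translate", "rnlt", "123"))  # 1a2s3ae
```

Building the table by hand, rather than calling `str.maketrans(matching, replace)`, lets `matching` and `replace` differ in length and makes the first-mapping-wins assumption explicit.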